flow-run: LLM Orchestration, Prompt Testing & Cost Monitoring

Introduction

Over the past couple of years, I've noticed a growing trend on X (Twitter): "build in public." Developers share screenshots, code snippets, and progress updates from the products they're building, tagging their posts with #buildinpublic.

While this trend is fascinating, the projects being showcased are typically closed source and proprietary. I believe that #buildinpublic should be truly public, with projects being open sourced from day one.

That's why I'm excited to announce my new open source project flow-run, which I'll build completely in public and document every step of the journey. The source code will be available on GitHub from the very first day of development.

The idea for this project was inspired by my previous product, ai-svc, developed for AI Founder, which I described here: Building ai-svc: A Reliable Foundation for AI Founder

Context

AI Engineering is an emerging trend, much like building in public, and we're seeing an explosion of AI-native applications being released. While I enjoy building AI-native applications myself, they all share common challenges:

- LLM providers lack reliability (I previously published an article exploring this issue)

- LLM prompt development differs fundamentally from classical programming, yet modern approaches tightly couple prompts with application code

- AI application frameworks are limited to specific languages like Python or TypeScript, restricting the choice of programming languages for efficient application development

From my infrastructure experience, LLM integration looks more like an infrastructure component than an application component. Prompts are remarkably similar to SQL queries: a dedicated engine (the LLM) executes them, and applications send requests to it. So why should we restructure our applications to embed prompts directly in application code when this is fundamentally an infrastructure concern?
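To make the SQL analogy concrete, here is a minimal Python sketch under stated assumptions: `PromptEngine`, `register`, and `execute` are hypothetical names, not flow-run's API, and the `llm` callable stands in for a real provider client. The application refers to a prompt by name and binds parameters, much like executing a parameterized query, while the template itself lives inside the engine.

```python
from string import Template
from typing import Callable, Dict


class PromptEngine:
    """Hypothetical engine that owns prompt templates, like a database owns its queries."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self._llm = llm  # stand-in for a real LLM provider client
        self._prompts: Dict[str, Template] = {}

    def register(self, name: str, template: str) -> None:
        # Prompt developers register templates; application code never sees them.
        self._prompts[name] = Template(template)

    def execute(self, name: str, params: Dict[str, str]) -> str:
        # Similar to binding parameters to a prepared SQL statement.
        prompt = self._prompts[name].substitute(params)
        return self._llm(prompt)


# Fake LLM that echoes the rendered prompt, so the sketch runs offline.
engine = PromptEngine(llm=lambda prompt: f"LLM<{prompt}>")
engine.register("summarize", "Summarize in one sentence: $text")

# Application code only knows the prompt name and its parameters.
print(engine.execute("summarize", {"text": "flow-run decouples prompts from apps."}))
```

Because the application depends only on the prompt name and its parameters, the template can change without touching or redeploying application code.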

The same principle applies to prompt versioning. While prompts resemble SQL, they're far harder to verify. Integration and load tests alone can't tell us whether a prompt works well: we also need evaluation tests to confirm that a new version performs better than the previous one. Embedding prompts within application code makes these tests unnecessarily complex.

Through reflection on these challenges, a product idea crystallized:

- What if we treated prompts as code, similar to Infrastructure as Code tools like Terraform?

- What if prompt execution was completely decoupled from application execution, with applications simply calling an execution engine?

- What if prompt developers could focus exclusively on prompt development, evaluation, and deployment?

User Stories

Let's define the scope and requirements for this project. I'll use the user stories technique to understand user needs and derive project requirements from them.

User Personas

I'm building flow-run for two distinct user personas:

User Persona 1: Prompt Developer

Role: Develop, debug, evaluate, and deploy prompts

Background: Former software engineer with knowledge of building software products and using development tools

Primary Pain Point: No unified approach to prompt development; constantly writing Python scripts for quick testing, implementing workarounds for prompt evaluation

User Persona 2: Application Developer

Role: Develop, debug, and deploy application servers

Background: Software engineer with expertise in building software products and using development tools

Primary Pain Point: Lacks time or specialized knowledge for prompt development; focuses primarily on business logic implementation; existing LLM integrations are unreliable

Prompt Developer User Stories

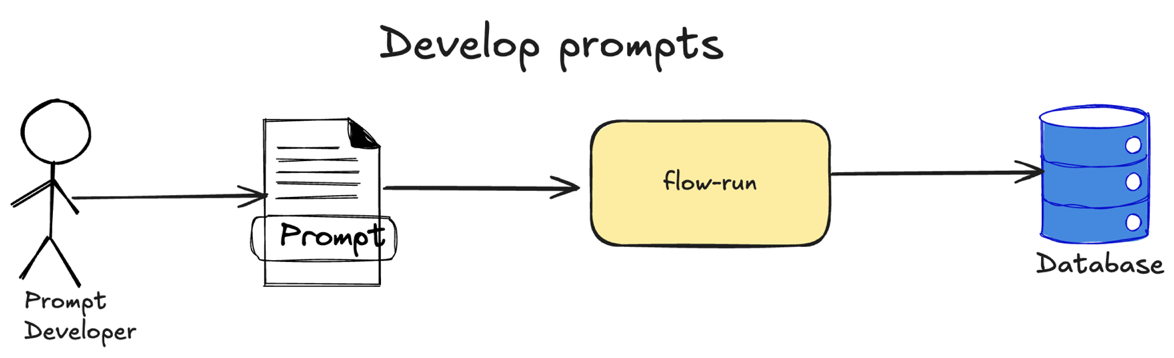

US-1-1: Develop Prompts

As a Prompt Developer, I want to define my prompts without traditional programming languages like Python, while maintaining the benefits of source code versioning (Git) and syntax highlighting (IDE).
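One way to satisfy this story is to keep each prompt in a plain data file that lives in Git and gets syntax highlighting in any IDE, and let the engine parse it. The sketch below assumes a hypothetical JSON layout (`name`, `version`, `model`, `template`, `variables`); flow-run's actual prompt format is not defined in this article. The prompt developer writes only the JSON; the Python loader represents engine-side code.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PromptDefinition:
    name: str
    version: int
    model: str
    template: str
    variables: List[str]


def load_prompt(source: str) -> PromptDefinition:
    """Parse a hypothetical JSON prompt file and check its declared variables."""
    raw = json.loads(source)
    definition = PromptDefinition(
        name=raw["name"],
        version=int(raw["version"]),
        model=raw["model"],
        template=raw["template"],
        variables=list(raw["variables"]),
    )
    # Catch typos early: every declared variable must appear in the template.
    for var in definition.variables:
        if "{" + var + "}" not in definition.template:
            raise ValueError(f"variable '{var}' not used in template of {definition.name}")
    return definition


# Contents of a file such as prompts/summarize.json tracked in Git (hypothetical layout).
PROMPT_FILE = """
{
  "name": "summarize",
  "version": 2,
  "model": "example-model",
  "template": "Summarize the following text in {max_words} words: {text}",
  "variables": ["text", "max_words"]
}
"""

prompt = load_prompt(PROMPT_FILE)
print(prompt.name, prompt.version)
print(prompt.template.format(text="hello world", max_words=5))
```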


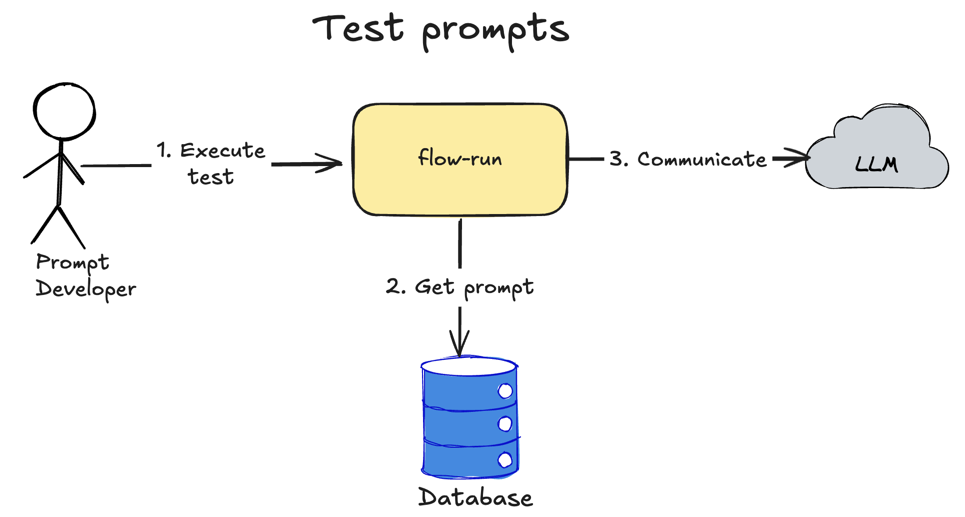

US-1-2: Test prompts

As a Prompt Developer, I want to test my prompts immediately after development without writing custom Python code or waiting for CI builds to execute.


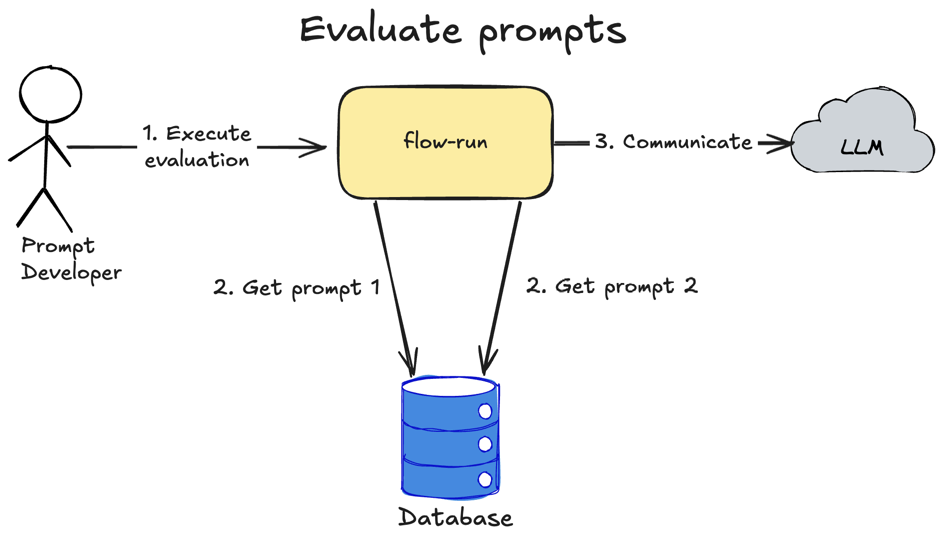

US-1-3: Evaluate prompt versions

As a Prompt Developer, I want to evaluate newly developed prompts against their production versions to ensure the new version performs better than the previous one.
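A minimal sketch of version evaluation, assuming a small labeled test set and a simple exact-match metric (both illustrative; real evaluations often use fuzzier scoring or an LLM as a judge). `evaluate` and `should_promote` are hypothetical helpers, and both prompt versions are faked with lookup tables so the example runs offline.

```python
from typing import Callable, List, Tuple

# A test case pairs an input with the expected answer (hypothetical dataset).
TestCase = Tuple[str, str]


def exact_match(output: str, expected: str) -> float:
    # Simplest possible metric: case-insensitive string equality.
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def evaluate(run_prompt: Callable[[str], str], cases: List[TestCase]) -> float:
    """Average score of one prompt version over the test set."""
    scores = [exact_match(run_prompt(inp), expected) for inp, expected in cases]
    return sum(scores) / len(scores)


def should_promote(candidate: Callable[[str], str], production: Callable[[str], str],
                   cases: List[TestCase], min_gain: float = 0.0) -> bool:
    # Promote only if the candidate beats production by more than min_gain.
    return evaluate(candidate, cases) > evaluate(production, cases) + min_gain


cases = [("capital of France?", "Paris"), ("2 + 2?", "4"), ("color of the sky?", "blue")]


# Fake prompt versions standing in for real LLM calls.
def production(question: str) -> str:
    return {"capital of France?": "Paris", "2 + 2?": "5", "color of the sky?": "green"}[question]


def candidate(question: str) -> str:
    return {"capital of France?": "Paris", "2 + 2?": "4", "color of the sky?": "Blue"}[question]


print(evaluate(production, cases), evaluate(candidate, cases))
print(should_promote(candidate, production, cases))
```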


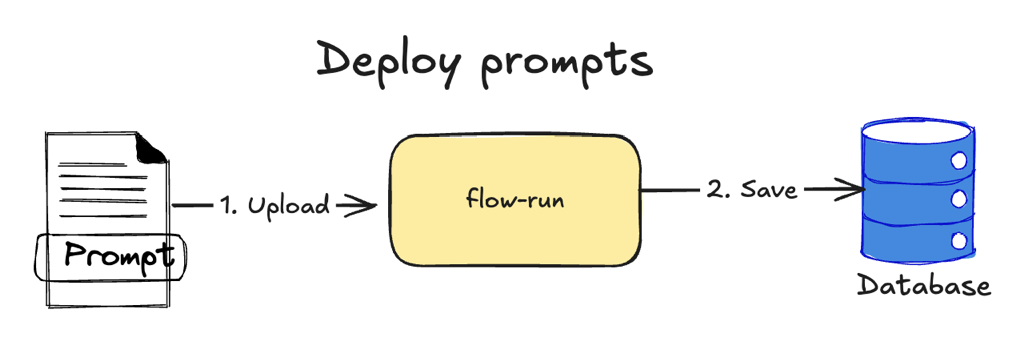

US-1-4: Deploy prompts

As a Prompt Developer, I want to deploy my prompts to dev and prod environments easily and reliably.


US-1-5: Automated prompts testing

As a Prompt Developer, I want to automate my prompt testing and run tests in CI after each Git push.

US-1-6: Automated prompts deployment

As a Prompt Developer, I want to automate prompt deployments through CD after each Git push to the main branch.

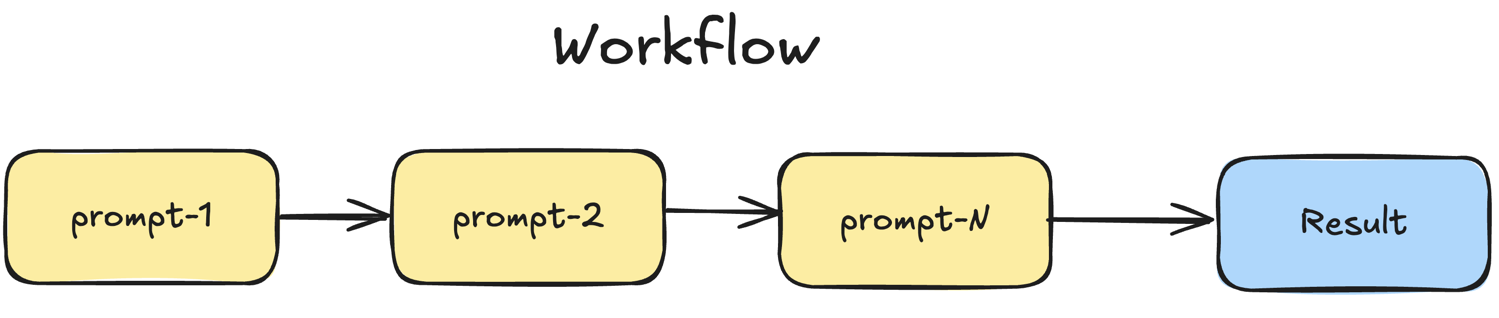

US-1-7: Prompts Workflows

As a Prompt Developer, I want to build workflows with my prompts where each step executes sequentially.
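Sequential execution can be sketched as a pipeline where each step's output becomes the next step's input. `Step` and `run_workflow` are hypothetical names, and the steps are plain functions standing in for LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    name: str
    run: Callable[[str], str]  # in a real engine this would be an LLM call


def run_workflow(steps: List[Step], initial_input: str) -> Dict[str, str]:
    """Execute steps in order, feeding each step's output to the next one."""
    outputs: Dict[str, str] = {}
    current = initial_input
    for step in steps:
        current = step.run(current)
        outputs[step.name] = current  # keep intermediate results for debugging
    return outputs


# Fake steps standing in for prompts such as "extract" or "summarize".
workflow = [
    Step("extract", lambda text: text.split(":", 1)[1].strip()),
    Step("uppercase", str.upper),
    Step("wrap", lambda text: f"[{text}]"),
]

result = run_workflow(workflow, "ticket: printer is on fire")
print(result["wrap"])
```

Keeping every intermediate output, rather than only the final one, makes it easier to see which step in a workflow produced a bad result.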


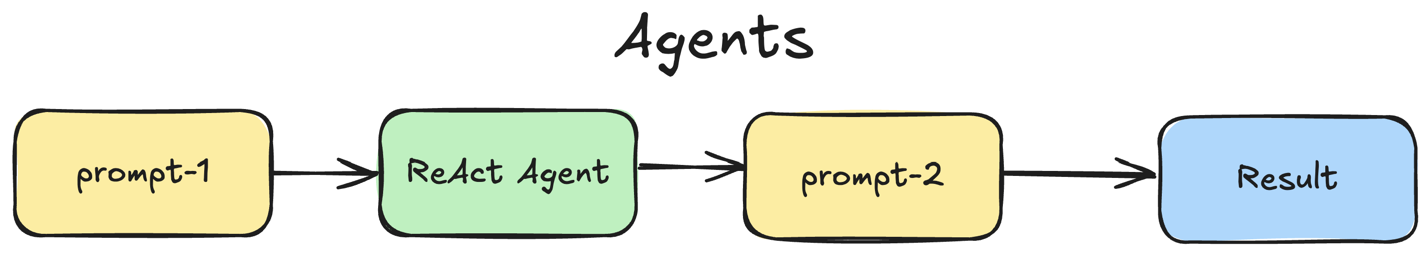

US-1-8: Prompts Agents

As a Prompt Developer, I want to build agents with my prompts and incorporate them into workflows as described in US-1-7.


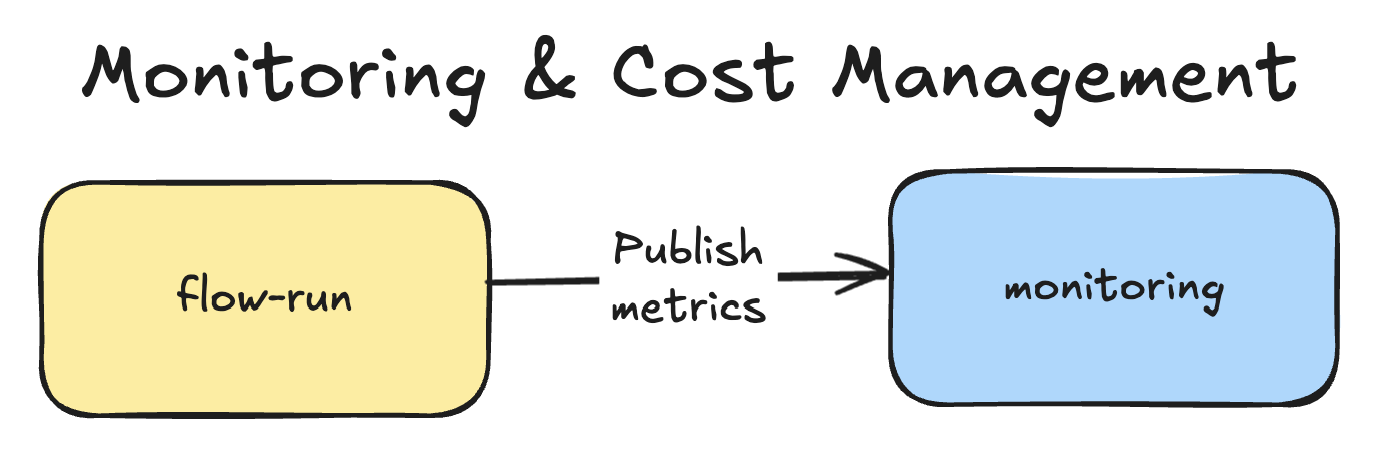

US-1-9: Observability & Costs Management

As a Prompt Developer, I want to monitor prompt execution and track LLM costs.
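Cost tracking mostly comes down to recording token usage per call and multiplying it by the provider's per-token price. The sketch below assumes hypothetical model names and made-up prices per million tokens; actual prices differ by provider and change over time. `Usage`, `call_cost`, and `cost_by_flow` are illustrative names.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical prices in USD per 1M tokens; not real provider pricing.
PRICES_PER_MILLION: Dict[str, Dict[str, float]] = {
    "model-a": {"input": 3.00, "output": 15.00},
    "model-b": {"input": 0.50, "output": 1.50},
}


@dataclass
class Usage:
    flow: str
    model: str
    input_tokens: int
    output_tokens: int


def call_cost(usage: Usage) -> float:
    # Input and output tokens are usually priced differently.
    price = PRICES_PER_MILLION[usage.model]
    return (usage.input_tokens * price["input"] + usage.output_tokens * price["output"]) / 1_000_000


def cost_by_flow(records: List[Usage]) -> Dict[str, float]:
    """Aggregate LLM spend per AI flow."""
    totals: Dict[str, float] = {}
    for record in records:
        totals[record.flow] = totals.get(record.flow, 0.0) + call_cost(record)
    return totals


records = [
    Usage("summarize", "model-a", input_tokens=2_000, output_tokens=500),
    Usage("summarize", "model-a", input_tokens=1_000, output_tokens=250),
    Usage("classify", "model-b", input_tokens=10_000, output_tokens=100),
]
for flow, usd in cost_by_flow(records).items():
    print(f"{flow}: ${usd:.4f}")
```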


US-1-10: Easy LLM swap

As a Prompt Developer, I want to switch LLM providers easily without extensive code changes.
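A common way to make providers swappable is to hide each one behind the same interface and select it from configuration. This Python sketch assumes a hypothetical `LLMProvider` protocol with a single `complete` method and two fake providers; real adapters would wrap each vendor's SDK.

```python
from typing import Dict, Protocol


class LLMProvider(Protocol):
    def complete(self, prompt: str) -> str: ...


# Two fake providers; real ones would wrap each vendor's SDK behind the same method.
class ProviderA:
    def complete(self, prompt: str) -> str:
        return f"A says: {prompt}"


class ProviderB:
    def complete(self, prompt: str) -> str:
        return f"B says: {prompt}"


PROVIDERS: Dict[str, LLMProvider] = {"provider-a": ProviderA(), "provider-b": ProviderB()}


def run_prompt(prompt: str, config: Dict[str, str]) -> str:
    # The provider comes from configuration, so swapping it needs no code change.
    provider = PROVIDERS[config["provider"]]
    return provider.complete(prompt)


print(run_prompt("hello", {"provider": "provider-a"}))
print(run_prompt("hello", {"provider": "provider-b"}))
```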

Application Developer User Stories

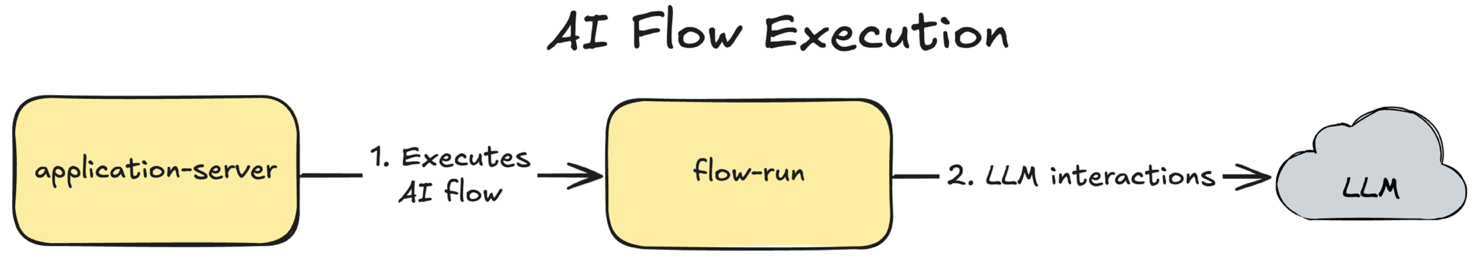

US-2-1: Execute AI Flow

As an Application Developer, I want to reliably execute AI Flows defined by Prompt Developers in the flow-run service to add AI integration to my application.


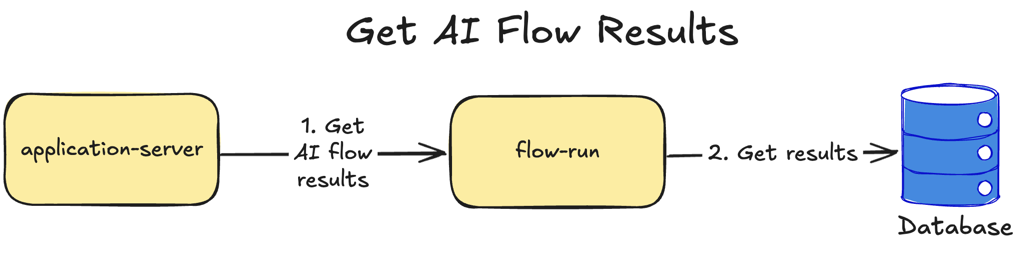

US-2-2: Get AI Flow results

As an Application Developer, I want to retrieve AI Flow results from the flow-run service when they're ready.
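US-2-1 and US-2-2 together describe an asynchronous pattern: submit a flow, get a job ID back immediately, and fetch the result once it's ready. The in-process sketch below assumes hypothetical names (`FlowRunner`, `submit`, `result`) and uses a worker thread with an in-memory queue; it has none of the durability a real service would need, since queued jobs are lost if the process exits.

```python
import queue
import threading
import uuid
from typing import Callable, Dict, Optional


class FlowRunner:
    """Hypothetical in-process stand-in for a flow execution service."""

    def __init__(self, flows: Dict[str, Callable[[str], str]]) -> None:
        self._flows = flows
        self._jobs: queue.Queue = queue.Queue()
        self._results: Dict[str, str] = {}
        self._lock = threading.Lock()
        threading.Thread(target=self._worker, daemon=True).start()

    def submit(self, flow: str, payload: str) -> str:
        # Fire-and-forget: enqueue the job and return an ID immediately.
        job_id = str(uuid.uuid4())
        self._jobs.put((job_id, flow, payload))
        return job_id

    def result(self, job_id: str) -> Optional[str]:
        # None means "not ready yet"; the client polls again later.
        with self._lock:
            return self._results.get(job_id)

    def wait_idle(self) -> None:
        self._jobs.join()  # block until every queued job has been processed

    def _worker(self) -> None:
        while True:
            job_id, flow, payload = self._jobs.get()
            try:
                output = self._flows[flow](payload)
            except Exception as exc:  # record failures instead of killing the worker
                output = f"ERROR: {exc}"
            with self._lock:
                self._results[job_id] = output
            self._jobs.task_done()


runner = FlowRunner({"shout": lambda text: text.upper()})
job_id = runner.submit("shout", "hello")
runner.wait_idle()  # a real client would poll result() periodically instead
print(runner.result(job_id))
```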


Project Requirements

Based on the defined user stories, I can establish the following project requirements:

- Reliable execution of AI flows using fire-and-forget semantics with guaranteed execution

- Infrastructure-as-Code approach for prompt development and deployment

- CLI tool for running, evaluating, and deploying prompts

- CI/CD support for prompt testing and deployment

- Support for common AI flow abstractions: tasks, workflows, and agents

- Observability and cost reporting for AI flow executions

- Multi-LLM provider support within the execution engine
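Guaranteed execution usually combines a durable job queue with retries around unreliable provider calls. This sketch covers only the retry half, assuming a hypothetical `call_with_retries` helper with exponential backoff; the `sleep` parameter is injectable so the behavior can be checked without actually waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(fn: Callable[[], T], attempts: int = 4, base_delay: float = 0.5,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry a flaky call with exponential backoff: 0.5s, 1s, 2s, ..."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")


# Fake provider call that times out twice before succeeding.
calls = {"n": 0}


def flaky_llm_call() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("provider timed out")
    return "ok"


delays = []
print(call_with_retries(flaky_llm_call, sleep=delays.append))
print(delays)
```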

Project Roadmap

| Stage | User Story | Description |
|---|---|---|
| v1 | US-1-1: Develop prompts | Enable prompt developers to create prompts |
| v1 | US-1-7: Prompts Workflows | Implement workflow support in flow-run |
| v1 | US-1-4: Deploy prompts | Enable prompt deployment capabilities |
| v1 | US-1-10: Easy LLM swap | Support LLM provider switching from day one |
| v1 | US-2-1: Execute AI Flow | Enable application developers to execute developed prompts |
| v1 | US-2-2: Get AI Flow results | Enable retrieval of execution results |
| v2 | US-1-2: Test prompts | Improve prompt quality in flow-run |
| v2 | US-1-3: Evaluate prompt versions | Enable prompt evolution without quality degradation |
| v2 | US-1-9: Observability & Costs Management | Add monitoring capabilities and cost tracking |
| v2 | US-1-5: Automated prompts testing | Enable CI integration for testing |
| v2 | US-1-6: Automated prompts deployment | Enable CD integration for deployments |
| v3 | US-1-8: Prompts Agents | Implement support for prompt agents |

Roadmap Explanation:

- v1 stage delivers a minimum viable product supporting basic prompt development and workflow execution. This enables applications to begin integrating with flow-run without waiting for full feature completion.

- v2 stage introduces prompt testing and evaluation, observability and cost tracking, and CI/CD automation.

- v3 stage implements AI Agent support, a complex feature that requires dedicated development focus.

Conclusions

Thank you for reading this announcement! I'm thrilled to launch this truly public project that I've been contemplating for the past 2-3 years. In upcoming articles, I'll cover the system design and share regular progress updates. All source code will be available on GitHub throughout the development journey.

