Build AI Systems in Pure Go, Production LLM Course

Learn to build production AI systems in pure Go. Master LLM integration, event-driven workflows, and reliability patterns from a Pinterest/Revolut engineer. No Python dependencies required.

Early Bird Special - Save $200!

30-day money-back guarantee • Lifetime updates

Don't Complicate Your Architecture for AI

Your Go system works. Adding Python AI services means more complexity, costs, and operational overhead.

The Hidden Cost of Python AI Services

Keep It Simple: AI in Your Existing Go System

LLMs are just HTTP APIs. OpenAI, Claude, Gemini - they're REST endpoints that return JSON. Add AI features without changing your architecture.
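To make the "just HTTP APIs" point concrete, here is a minimal sketch using only the standard library. It is an illustration, not course material. It assumes an OpenAI-style chat completions request and response shape; the names `complete` and `parseReply`, the model name, and the API key are hypothetical, and a local stub server stands in for the provider so the example runs offline.

```go
package main

import (
	"bytes"
	"context"
	"encoding/json"
	"errors"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"time"
)

// chatMessage, chatRequest and chatResponse model only the fields this sketch
// uses from an OpenAI-style chat completions API (an assumption).
type chatMessage struct {
	Role    string `json:"role"`
	Content string `json:"content"`
}

type chatRequest struct {
	Model    string        `json:"model"`
	Messages []chatMessage `json:"messages"`
}

type chatResponse struct {
	Choices []struct {
		Message chatMessage `json:"message"`
	} `json:"choices"`
}

// parseReply extracts the text of the first choice from a response body.
func parseReply(body []byte) (string, error) {
	var resp chatResponse
	if err := json.Unmarshal(body, &resp); err != nil {
		return "", fmt.Errorf("decode response: %w", err)
	}
	if len(resp.Choices) == 0 {
		return "", errors.New("response contained no choices")
	}
	return resp.Choices[0].Message.Content, nil
}

// complete sends one user prompt to baseURL and returns the reply text.
func complete(ctx context.Context, client *http.Client, baseURL, apiKey, model, prompt string) (string, error) {
	payload, err := json.Marshal(chatRequest{
		Model:    model,
		Messages: []chatMessage{{Role: "user", Content: prompt}},
	})
	if err != nil {
		return "", fmt.Errorf("encode request: %w", err)
	}
	req, err := http.NewRequestWithContext(ctx, http.MethodPost, baseURL+"/v1/chat/completions", bytes.NewReader(payload))
	if err != nil {
		return "", fmt.Errorf("build request: %w", err)
	}
	req.Header.Set("Content-Type", "application/json")
	req.Header.Set("Authorization", "Bearer "+apiKey)

	res, err := client.Do(req)
	if err != nil {
		return "", fmt.Errorf("call provider: %w", err)
	}
	defer res.Body.Close()

	body, err := io.ReadAll(res.Body)
	if err != nil {
		return "", fmt.Errorf("read response: %w", err)
	}
	if res.StatusCode != http.StatusOK {
		return "", fmt.Errorf("provider returned %s", res.Status)
	}
	return parseReply(body)
}

func main() {
	// A local stub stands in for the provider so the sketch runs offline.
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		fmt.Fprint(w, `{"choices":[{"message":{"role":"assistant","content":"pong"}}]}`)
	}))
	defer srv.Close()

	client := &http.Client{Timeout: 10 * time.Second}
	reply, err := complete(context.Background(), client, srv.URL, "test-key", "example-model", "ping")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(reply)
}
```

Pointing `baseURL` at a real provider and supplying a real key is the only change needed to leave the stub behind, provided the provider accepts this request shape.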

Business Impact: Lower Cost, Faster Delivery

Operational Savings:

- ✓ No new services to deploy/monitor

- ✓ Leverage existing Go team

- ✓ Single deployment pipeline

What You'll Build:

- 🤖 Intelligent notification systems

- ⚡ Event-driven AI workflows

- 📊 Production-ready decision engines

Real Production System You'll Build

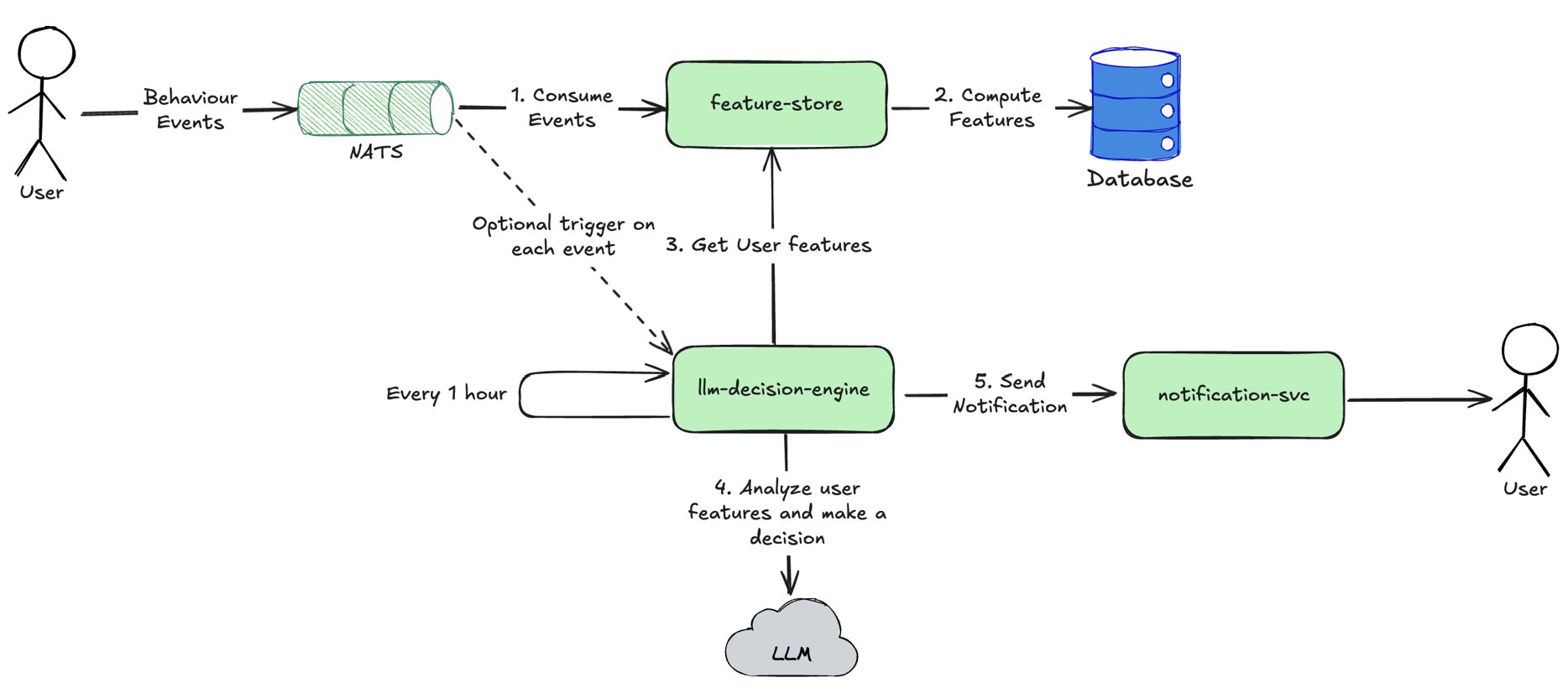

An intelligent notification system that analyzes user behavior in real time and decides when to send personalized notifications.

Event-Driven AI Architecture

5-service architecture handling millions of events with AI-powered decision making

How It Works: Real-Time Intelligence

Event Processing

- ✓ NATS streaming

- ✓ Backpressure handling

- ✓ Event sourcing

- ✓ LLM trigger workflows
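Backpressure handling can be illustrated without any message broker. The sketch below is a simplified illustration, not course code: it uses a bounded Go channel rather than NATS, and `Event`, `Queue`, `TryEnqueue`, and `ErrQueueFull` are hypothetical names. The idea is that intake never blocks; when the buffer in front of slow LLM workers is full, the caller is told so and can retry later or shed load.

```go
package main

import (
	"errors"
	"fmt"
)

// Event is a hypothetical user-behavior event.
type Event struct {
	UserID string
	Kind   string
}

// ErrQueueFull signals that downstream workers are not keeping up.
var ErrQueueFull = errors.New("event queue full")

// Queue is a bounded buffer between event intake and LLM workers.
type Queue struct {
	ch chan Event
}

// NewQueue creates a queue that holds at most capacity events.
func NewQueue(capacity int) *Queue {
	return &Queue{ch: make(chan Event, capacity)}
}

// TryEnqueue never blocks. It returns ErrQueueFull when the buffer is full
// so the caller can slow down, retry later, or drop the event.
func (q *Queue) TryEnqueue(e Event) error {
	select {
	case q.ch <- e:
		return nil
	default:
		return ErrQueueFull
	}
}

// Dequeue blocks until an event is available.
func (q *Queue) Dequeue() Event {
	return <-q.ch
}

func main() {
	q := NewQueue(2)
	for i := 1; i <= 3; i++ {
		err := q.TryEnqueue(Event{UserID: fmt.Sprintf("user-%d", i), Kind: "page_view"})
		fmt.Printf("enqueue %d: %v\n", i, err)
	}

	// Draining one event frees a slot, so the rejected event can be retried.
	fmt.Println("dequeued:", q.Dequeue().UserID)
	fmt.Println("retry:", q.TryEnqueue(Event{UserID: "user-3", Kind: "page_view"}))
}
```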

Feature Engineering

- ✓ Real-time aggregations

- ✓ Feature versioning

- ✓ Caching strategies

- ✓ LLM context preparation

AI Integration

- ✓ Structured LLM outputs

- ✓ Multi-provider support

- ✓ Cost optimization

- ✓ Fallback strategies
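One way to picture multi-provider support with fallback is a small interface plus an ordered list of implementations. This is a hedged sketch, not the course's design: `Provider`, `stubProvider`, and `completeWithFallback` are hypothetical names, and the stubs stand in for real HTTP clients. A real client would normally also accept a `context.Context` for cancellation, which is omitted here to keep the example short.

```go
package main

import (
	"errors"
	"fmt"
)

// Provider is a hypothetical abstraction over one LLM vendor.
type Provider interface {
	Name() string
	Complete(prompt string) (string, error)
}

// stubProvider returns a fixed reply or a fixed error, for demonstration.
type stubProvider struct {
	name  string
	reply string
	err   error
}

func (s stubProvider) Name() string { return s.name }

func (s stubProvider) Complete(prompt string) (string, error) {
	return s.reply, s.err
}

// errUnavailable simulates a provider outage in the demo below.
var errUnavailable = errors.New("provider unavailable")

// completeWithFallback tries providers in order and returns the first
// successful reply together with the name of the provider that produced it.
// If every provider fails, the individual errors are joined into one.
func completeWithFallback(prompt string, providers ...Provider) (reply, used string, err error) {
	if len(providers) == 0 {
		return "", "", errors.New("no providers configured")
	}
	var errs []error
	for _, p := range providers {
		out, perr := p.Complete(prompt)
		if perr == nil {
			return out, p.Name(), nil
		}
		errs = append(errs, fmt.Errorf("%s: %w", p.Name(), perr))
	}
	return "", "", errors.Join(errs...)
}

func main() {
	primary := stubProvider{name: "primary", err: errUnavailable}
	backup := stubProvider{name: "backup", reply: "send the notification"}

	reply, used, err := completeWithFallback("should we notify user-1?", primary, backup)
	if err != nil {
		fmt.Println("all providers failed:", err)
		return
	}
	fmt.Printf("%s answered: %s\n", used, reply)
}
```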

Production Ops

- ✓ Prometheus metrics

- ✓ Circuit breakers

- ✓ Grafana dashboards

- ✓ LLM cost tracking

7 Hands-On Production Modules

Build a complete AI system step-by-step, from LLM integration to production deployment

Go-Native LLM Integration

Build production LLM clients in pure Go

What You'll Master:

- ✓ Pure Go client implementation

- ✓ HTTP client configuration

- ✓ Structured outputs for decisions

- ✓ Token counting and cost tracking

- ✓ Basic error handling patterns

Project Outcome:

High-performance LLM service in Go
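As a rough illustration of structured outputs and cost tracking, the sketch below decodes a model's JSON reply strictly and estimates spend from token counts. Everything here is an assumption made for the example: the `Decision` schema, the allowed channels, and the per-million-token prices are placeholders, not real provider pricing or the course's schema.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
)

// Decision is a hypothetical schema the model is asked to return.
type Decision struct {
	Send    bool   `json:"send"`
	Channel string `json:"channel"`
	Reason  string `json:"reason"`
}

// parseDecision decodes the reply strictly: unknown fields are rejected,
// and a positive decision must name a supported channel.
func parseDecision(raw []byte) (Decision, error) {
	var d Decision
	dec := json.NewDecoder(bytes.NewReader(raw))
	dec.DisallowUnknownFields()
	if err := dec.Decode(&d); err != nil {
		return Decision{}, fmt.Errorf("decode decision: %w", err)
	}
	if d.Send {
		switch d.Channel {
		case "push", "email":
		default:
			return Decision{}, fmt.Errorf("unsupported channel %q", d.Channel)
		}
	}
	return d, nil
}

// Usage holds the token counts reported for one call.
type Usage struct {
	PromptTokens     int
	CompletionTokens int
}

// Pricing holds placeholder prices in USD per million tokens.
type Pricing struct {
	InputPerMillion  float64
	OutputPerMillion float64
}

// Cost estimates the USD cost of one call from its token usage.
func (p Pricing) Cost(u Usage) float64 {
	return float64(u.PromptTokens)/1e6*p.InputPerMillion +
		float64(u.CompletionTokens)/1e6*p.OutputPerMillion
}

func main() {
	d, err := parseDecision([]byte(`{"send":true,"channel":"push","reason":"cart abandoned"}`))
	if err != nil {
		fmt.Println("rejecting model output:", err)
		return
	}
	fmt.Printf("decision: %+v\n", d)

	pricing := Pricing{InputPerMillion: 1, OutputPerMillion: 2} // placeholder prices
	fmt.Printf("estimated cost: $%.6f\n", pricing.Cost(Usage{PromptTokens: 420, CompletionTokens: 35}))
}
```

Rejecting malformed output at the boundary keeps downstream code free of defensive checks; whether to retry, fall back, or skip the notification on rejection is a separate policy choice.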

Feature Engineering for LLM Prompts

Extract features from events and databases to create intelligent LLM prompts

What You'll Master:

- ✓ Feature extraction from events and databases

- ✓ Feature aggregation and transformation

- ✓ Dynamic prompt template construction

- ✓ Feature injection into LLM context

- ✓ Prompt optimization with feature data

Project Outcome:

Feature-driven prompt generation system
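Dynamic prompt construction from features can be sketched with the standard `text/template` package. This is one possible approach under stated assumptions, not the course's implementation: the `Features` fields, the prompt wording, and the `buildPrompt` helper are all hypothetical.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// Features is a hypothetical set of per-user features computed upstream.
type Features struct {
	UserID            string
	SessionsLast7Days int
	LastNotification  string // humanized, e.g. "3 days ago"
	CartItems         []string
}

// promptText is the template source. The cart line is emitted only when
// the user has items in the cart.
const promptText = `You decide whether to send a push notification.
User: {{.UserID}}
Sessions in the last 7 days: {{.SessionsLast7Days}}
Last notification: {{.LastNotification}}
{{- if .CartItems}}
Items left in cart: {{join .CartItems ", "}}
{{- end}}
Answer with JSON: {"send": bool, "reason": string}`

// promptTmpl is parsed once at startup; template.Must panics on a bad template.
var promptTmpl = template.Must(
	template.New("notify").
		Funcs(template.FuncMap{"join": strings.Join}).
		Parse(promptText),
)

// buildPrompt renders the template with the given features.
func buildPrompt(f Features) (string, error) {
	var sb strings.Builder
	if err := promptTmpl.Execute(&sb, f); err != nil {
		return "", fmt.Errorf("render prompt: %w", err)
	}
	return sb.String(), nil
}

func main() {
	prompt, err := buildPrompt(Features{
		UserID:            "user-42",
		SessionsLast7Days: 4,
		LastNotification:  "3 days ago",
		CartItems:         []string{"running shoes", "socks"},
	})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(prompt)
}
```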

LLM Patterns & Workflows

Master advanced LLM patterns for complex decision making

What You'll Master:

- ✓ The augmented LLM pattern

- ✓ Workflow: Prompt chaining

- ✓ Workflow: Routing decisions

- ✓ Workflow: Parallelization strategies

- ✓ Workflow: Evaluator-optimizer loops

Project Outcome:

Multi-pattern LLM orchestrator
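Two of the workflows listed above, prompt chaining and parallelization, map naturally onto plain Go functions and goroutines. The following is a minimal sketch under assumptions: `LLM` is a hypothetical function type standing in for a real client call, and `chain` and `fanOut` are illustrative names, not the course's orchestrator.

```go
package main

import (
	"fmt"
	"sync"
)

// LLM is a hypothetical stand-in for a real client call.
type LLM func(prompt string) (string, error)

// chain implements prompt chaining: each step's instruction is combined with
// the previous output, and the result feeds the next step.
func chain(llm LLM, input string, steps ...string) (string, error) {
	out := input
	for i, step := range steps {
		var err error
		out, err = llm(step + "\n\n" + out)
		if err != nil {
			return "", fmt.Errorf("step %d: %w", i, err)
		}
	}
	return out, nil
}

// fanOut implements parallelization: all prompts run concurrently and the
// results come back in the same order as the prompts.
func fanOut(llm LLM, prompts []string) ([]string, []error) {
	results := make([]string, len(prompts))
	errs := make([]error, len(prompts))
	var wg sync.WaitGroup
	for i, p := range prompts {
		wg.Add(1)
		go func(i int, p string) {
			defer wg.Done()
			// Each goroutine writes only to its own index, so no lock is needed.
			results[i], errs[i] = llm(p)
		}(i, p)
	}
	wg.Wait()
	return results, errs
}

func main() {
	// A fake model that wraps its input, so the data flow is visible.
	fake := func(prompt string) (string, error) {
		return "<" + prompt + ">", nil
	}

	out, err := chain(fake, "raw event data", "Summarize:", "Decide:")
	fmt.Printf("chain: %q, err: %v\n", out, err)

	results, _ := fanOut(fake, []string{"check tone", "check length", "check policy"})
	fmt.Println("fan-out:", results)
}
```

In production, `fanOut` would usually also cap concurrency to respect provider rate limits; that is left out here for brevity.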

Production LLM Decision Engine

Build reliable LLM workflows for business-critical decisions

What You'll Master:

- ✓ Prompt quality evaluation techniques

- ✓ Model selection for production use

- ✓ LLM workflow testing strategies

- ✓ Notification decision system implementation

- ✓ Quality assurance and reliability patterns

Project Outcome:

Production-ready notification decision engine

Production Reliability Patterns

Build fault-tolerant LLM systems that handle failures gracefully

What You'll Master:

- ✓ Transactional outbox pattern

- ✓ Event sourcing for AI calls

- ✓ Circuit breakers for providers

- ✓ Fallback strategies

- ✓ Cost controls

Project Outcome:

Bulletproof delivery system
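A circuit breaker in front of an LLM provider can be sketched in a few dozen lines. The version below is deliberately simplified and is an assumption-based illustration, not the course's code: it counts consecutive failures, rejects calls while open, and lets calls through again after a cooldown without limiting how many trial calls run at once. `Breaker`, `NewBreaker`, and `ErrOpen` are hypothetical names.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
	"time"
)

// ErrOpen is returned when the breaker rejects a call without running it.
var ErrOpen = errors.New("circuit breaker is open")

// errProvider simulates a failing provider in the demo below.
var errProvider = errors.New("provider unavailable")

// Breaker opens after threshold consecutive failures and stays open
// for the cooldown period.
type Breaker struct {
	mu        sync.Mutex
	failures  int
	threshold int
	cooldown  time.Duration
	openedAt  time.Time
}

// NewBreaker creates a closed breaker.
func NewBreaker(threshold int, cooldown time.Duration) *Breaker {
	return &Breaker{threshold: threshold, cooldown: cooldown}
}

// Do runs fn unless the breaker is open. Once the cooldown has elapsed,
// calls are allowed again: a success resets the failure count, while a
// failure reopens the breaker for another cooldown.
func (b *Breaker) Do(fn func() error) error {
	b.mu.Lock()
	open := b.failures >= b.threshold && time.Since(b.openedAt) < b.cooldown
	b.mu.Unlock()
	if open {
		return ErrOpen
	}

	err := fn()

	b.mu.Lock()
	defer b.mu.Unlock()
	if err != nil {
		b.failures++
		if b.failures >= b.threshold {
			b.openedAt = time.Now()
		}
		return err
	}
	b.failures = 0
	return nil
}

func main() {
	b := NewBreaker(2, 30*time.Second)
	failing := func() error { return errProvider }

	for i := 1; i <= 3; i++ {
		fmt.Printf("call %d: %v\n", i, b.Do(failing))
	}
}
```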

Monitoring & Optimization

Monitor LLM performance, costs, and quality with production-grade observability

What You'll Master:

- ✓ Prometheus metrics for LLM systems

- ✓ Grafana dashboards for AI workflows

- ✓ Cost monitoring and budget controls

- ✓ Token usage and latency tracking

- ✓ LLM response quality monitoring

Project Outcome:

Complete LLM observability stack
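Budget controls can be as simple as a guarded counter checked before each call. The sketch below is a standard-library illustration only: it does not use Prometheus, and `Budget`, `Reserve`, and the dollar figures are hypothetical. In a real system the same numbers would typically also be exported as metrics.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// ErrBudgetExceeded is returned when a call would push spend over the limit.
var ErrBudgetExceeded = errors.New("LLM budget exceeded")

// Budget tracks spend against a fixed limit for one accounting period.
type Budget struct {
	mu       sync.Mutex
	limitUSD float64
	spentUSD float64
}

// NewBudget creates a budget with the given limit in USD.
func NewBudget(limitUSD float64) *Budget {
	return &Budget{limitUSD: limitUSD}
}

// Reserve records costUSD if it fits within the limit. Otherwise it returns
// ErrBudgetExceeded and leaves the recorded spend unchanged.
func (b *Budget) Reserve(costUSD float64) error {
	b.mu.Lock()
	defer b.mu.Unlock()
	if b.spentUSD+costUSD > b.limitUSD {
		return ErrBudgetExceeded
	}
	b.spentUSD += costUSD
	return nil
}

// Remaining reports how much of the limit is still unspent.
func (b *Budget) Remaining() float64 {
	b.mu.Lock()
	defer b.mu.Unlock()
	return b.limitUSD - b.spentUSD
}

func main() {
	budget := NewBudget(1.00) // placeholder daily limit in USD

	for _, cost := range []float64{0.50, 0.25, 0.50} {
		if err := budget.Reserve(cost); err != nil {
			fmt.Printf("skip call costing $%.2f: %v\n", cost, err)
			continue
		}
		fmt.Printf("call costing $%.2f allowed, $%.2f remaining\n", cost, budget.Remaining())
	}
}
```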

Scaling to Production

Scale LLM systems to handle millions of events with optimal performance and cost efficiency

What You'll Master:

- ✓ Horizontal scaling patterns for LLM workflows

- ✓ Database optimizations for feature queries

- ✓ LLM response caching strategies

- ✓ Multi-tenant LLM system architecture

- ✓ Production deployment and cost optimization

Project Outcome:

Enterprise-scale LLM notification system
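Response caching is one of the cheaper ways to cut LLM cost at scale. As a hedged sketch, assuming an in-memory, single-process cache keyed by a hash of model and prompt, it might look like the code below. `Cache`, `GetOrCompute`, and the TTL value are hypothetical, and concurrent misses for the same key may each call the provider, since there is no request coalescing here.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"sync"
	"time"
)

// entry is one cached reply with its expiry time.
type entry struct {
	value   string
	expires time.Time
}

// Cache is an in-memory TTL cache for LLM replies.
type Cache struct {
	mu    sync.Mutex
	ttl   time.Duration
	items map[string]entry
}

// NewCache creates a cache whose entries live for ttl.
func NewCache(ttl time.Duration) *Cache {
	return &Cache{ttl: ttl, items: make(map[string]entry)}
}

// cacheKey hashes model and prompt so keys have a fixed size. The zero byte
// separator keeps ("ab", "c") and ("a", "bc") from colliding.
func cacheKey(model, prompt string) string {
	sum := sha256.Sum256([]byte(model + "\x00" + prompt))
	return hex.EncodeToString(sum[:])
}

// GetOrCompute returns a fresh cached reply if one exists. Otherwise it calls
// compute and stores the result only when compute succeeds.
func (c *Cache) GetOrCompute(model, prompt string, compute func() (string, error)) (string, error) {
	k := cacheKey(model, prompt)

	c.mu.Lock()
	e, ok := c.items[k]
	c.mu.Unlock()
	if ok && time.Now().Before(e.expires) {
		return e.value, nil
	}

	v, err := compute()
	if err != nil {
		return "", err
	}

	c.mu.Lock()
	c.items[k] = entry{value: v, expires: time.Now().Add(c.ttl)}
	c.mu.Unlock()
	return v, nil
}

func main() {
	cache := NewCache(5 * time.Minute)
	calls := 0
	ask := func() (string, error) {
		calls++ // stands in for a real, billable provider call
		return "send at 18:00", nil
	}

	for i := 0; i < 3; i++ {
		reply, _ := cache.GetOrCompute("example-model", "best time to notify user-42?", ask)
		fmt.Println(reply)
	}
	fmt.Println("provider calls:", calls)
}
```

Exact-match caching only helps when prompts repeat verbatim, so it tends to pay off most when volatile features are bucketed or rounded before they reach the prompt.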

What You'll Be Able to Do

Learn from a Production Systems Expert

Built scalable systems at Pinterest and Revolut, and created Go-native AI tools in production

Vitalii Honchar

Senior Software Engineer

I've built production systems that handle millions of requests at scale. After years of infrastructure work, I saw how AI could enhance these systems—but only if done right. I created this course to teach the infrastructure-first approach to AI that actually works in production.

Production Infrastructure

- Pinterest: Continuous deployment platform

- Revolut: Feature Store at banking scale

- Form3: Financial processing systems

AI Systems Innovation

- Building Go-native LLM orchestration tools

- Published AI + infrastructure patterns

- Consulting on production AI systems

💡 "I teach the patterns that actually work when your AI system needs to handle real traffic, real failures, and real business requirements."

Production-Grade Technology Stack

Master the tools used by top tech companies for scalable AI systems

Real production stack used at Pinterest, Revolut, and other scale-ups

Common Questions About This Course

Everything you need to know before building production LLM systems in Go

Do I need Python or ML background?

Not at all! This course is 100% Go. I treat LLMs as HTTP APIs—if you can build REST services in Go, you can build AI systems. No ML theory or Python required.

Will this work with my existing Go architecture?

Yes! The patterns work whether you have a monolith, microservices, or event-driven architecture. I show how to add LLM features without changing your core system.

Is this production-ready or just tutorial code?

Production-ready. You'll build a complete notification system with circuit breakers, monitoring, cost controls, and reliability patterns used at companies like Pinterest and Revolut.

What about other LLM providers besides OpenAI?

The patterns work with any HTTP-based LLM API (Claude, Gemini, etc.). I start with OpenAI for consistency, then show multi-provider patterns.

How is this different from Python AI frameworks?

Python frameworks add complexity for Go teams. This course teaches infrastructure-first patterns that leverage your existing Go expertise—no new languages or frameworks.

What level Go experience do I need?

Intermediate. You should be comfortable with HTTP servers, JSON handling, goroutines, and basic database operations. If you've built APIs in Go, you're ready.

Still have questions?

Email me at vitaliy.gonchar.work@gmail.com and I'll help you determine if this course is right for your team and project.

Ready to Build AI Systems in Pure Go?

Stop polluting your Go projects with Python. Start building production AI systems that scale.

30-day guarantee • Lifetime updates • Real production system