From SaaS to Open Source: The Full Story of AI Founder

Introduction

I have a project, AI Founder, which is supposed to help people validate business problems before launching new products. Its target audience is:

- Indie Hackers

- Serial Entrepreneurs

- Startups

But over the past months of running it, I decided to pivot and focus on the blog. In this blog post I will explain how I built AI Founder and share the Open Source version of it.

AI Founder is now Open Source and available on GitHub.

Landing Page is available here.

AI Founder is available here.

Demo of AI Founder is available here: YouTube

Subscribe to my Substack so you don't miss my new articles 😊

Problem Statement

I'm a software engineer and I love developing my own products, but I don't know how to decide which idea is worth investing my efforts in. That's why I decided to automate the process of idea validation in AI Founder.

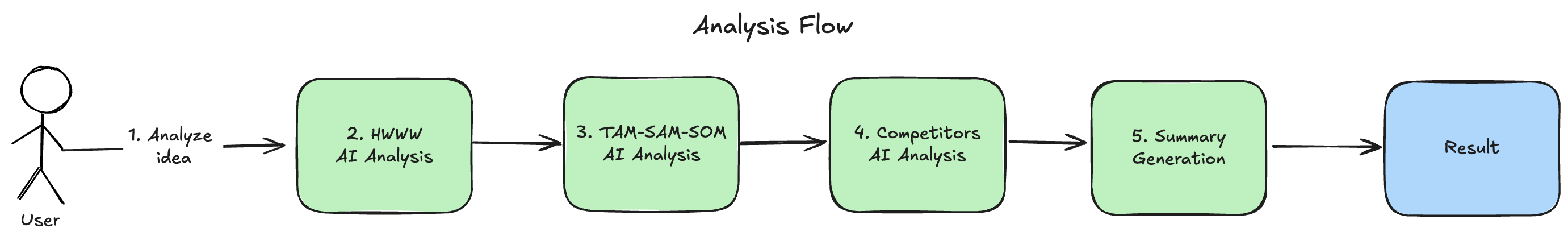

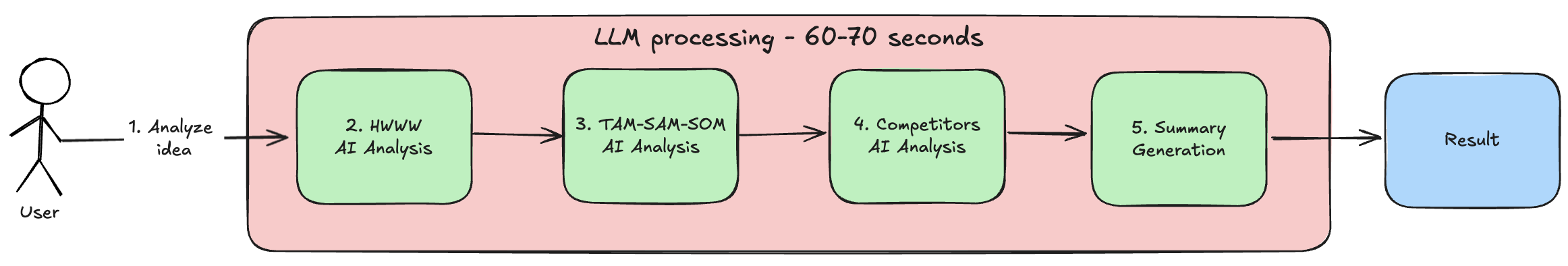

High Level Analysis Flow


- User sends an idea to analyze

- AI performs HWW Analysis.

- AI performs TAM-SAM-SOM Analysis.

- AI performs Competitors Analysis.

- AI generates Summary

HWW Analysis:

- How big is this problem?

- Why does this problem exist?

- Why is nobody solving it?

- Who faces this problem?

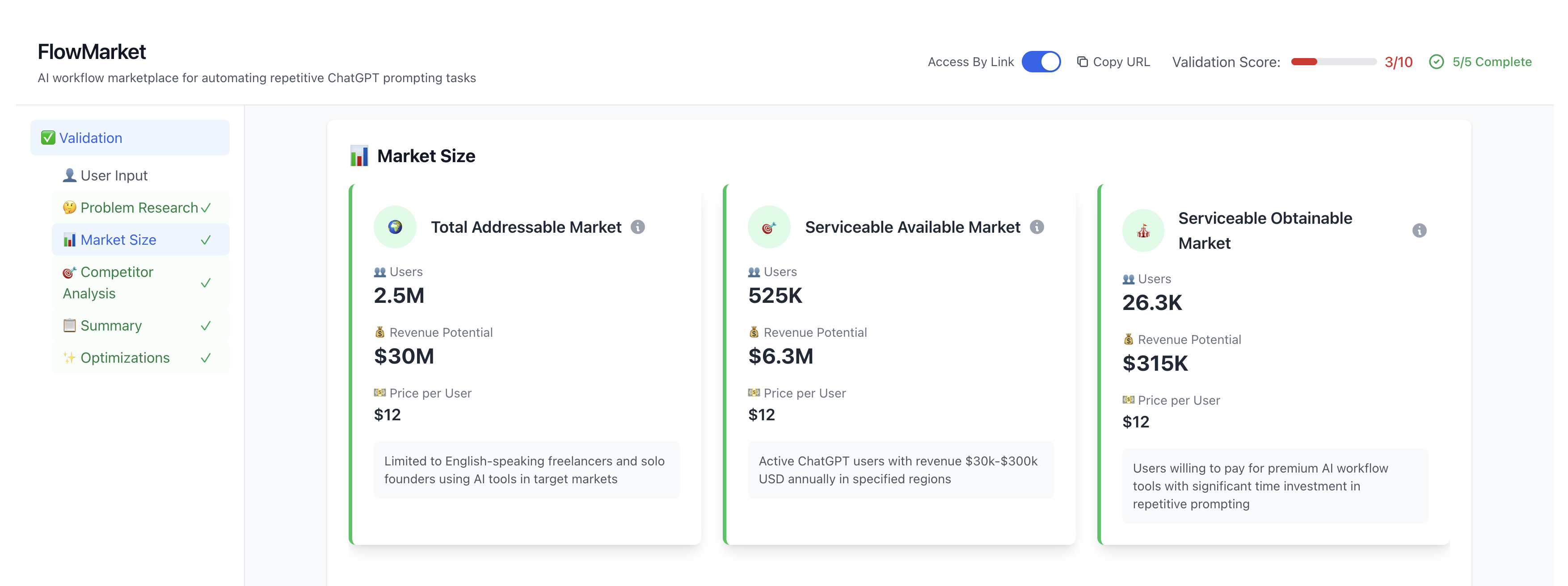

TAM-SAM-SOM Analysis:

- Total Addressable Market

- Serviceable Available Market

- Serviceable Obtainable Market

- Market Landscape
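
To make the three market sizes concrete, here is a minimal sketch of the usual top-down arithmetic. All numbers and the `estimateMarket` helper are hypothetical illustrations; they are not taken from AI Founder, where the LLM performs this analysis.

```javascript
// Hypothetical inputs, for illustration only; none of these numbers come from AI Founder.
const marketInputs = {
  potentialCustomers: 1_000_000, // everyone who has the problem
  annualRevenuePerCustomer: 120, // USD per customer per year
  serviceablePercent: 10,        // % reachable with this product and its channels
  obtainablePercent: 5,          // % of the serviceable market that can realistically be won
};

// Derives TAM, SAM and SOM (all in USD per year) from the inputs above.
function estimateMarket(inputs) {
  const tam = inputs.potentialCustomers * inputs.annualRevenuePerCustomer;
  const sam = (tam * inputs.serviceablePercent) / 100;
  const som = (sam * inputs.obtainablePercent) / 100;
  return { tam, sam, som };
}

const market = estimateMarket(marketInputs);
console.log(market); // { tam: 120000000, sam: 12000000, som: 600000 }
```

Each level is a fraction of the previous one, so an overestimated TAM inflates everything below it.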

Key learning here:

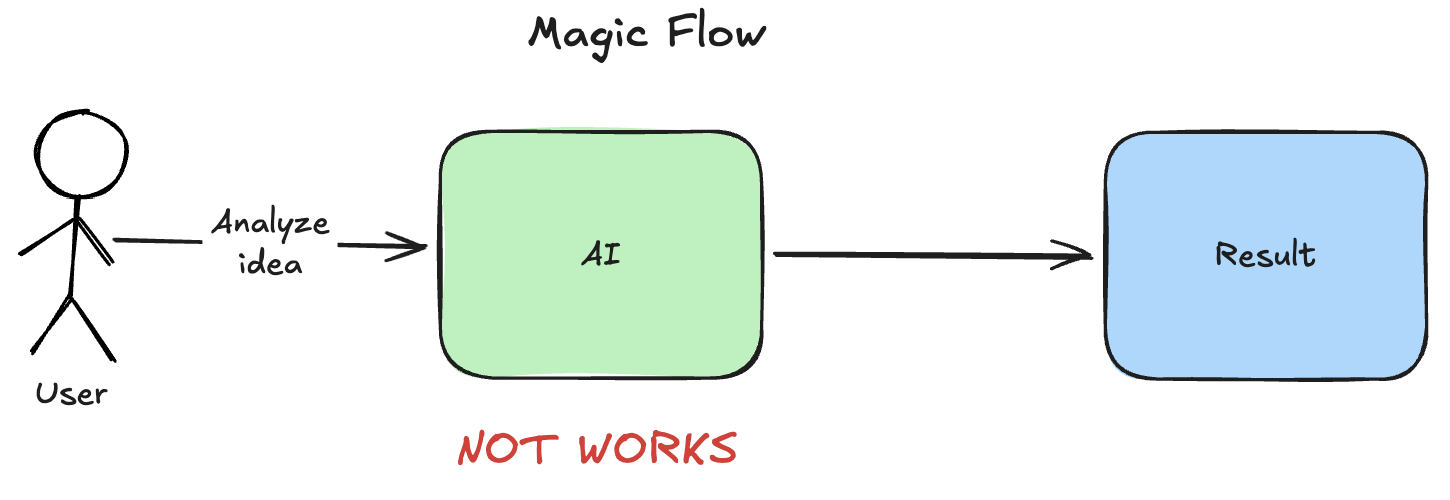

An LLM can't perform a good multi-step analysis in a single prompt: the analysis comes back incomplete and the LLM misses critical parts of it.

That's why it's worth following the Single Responsibility principle here and running each analysis as a separate LLM call to improve accuracy.

So my analysis flow consists of multiple steps rather than one big prompt.

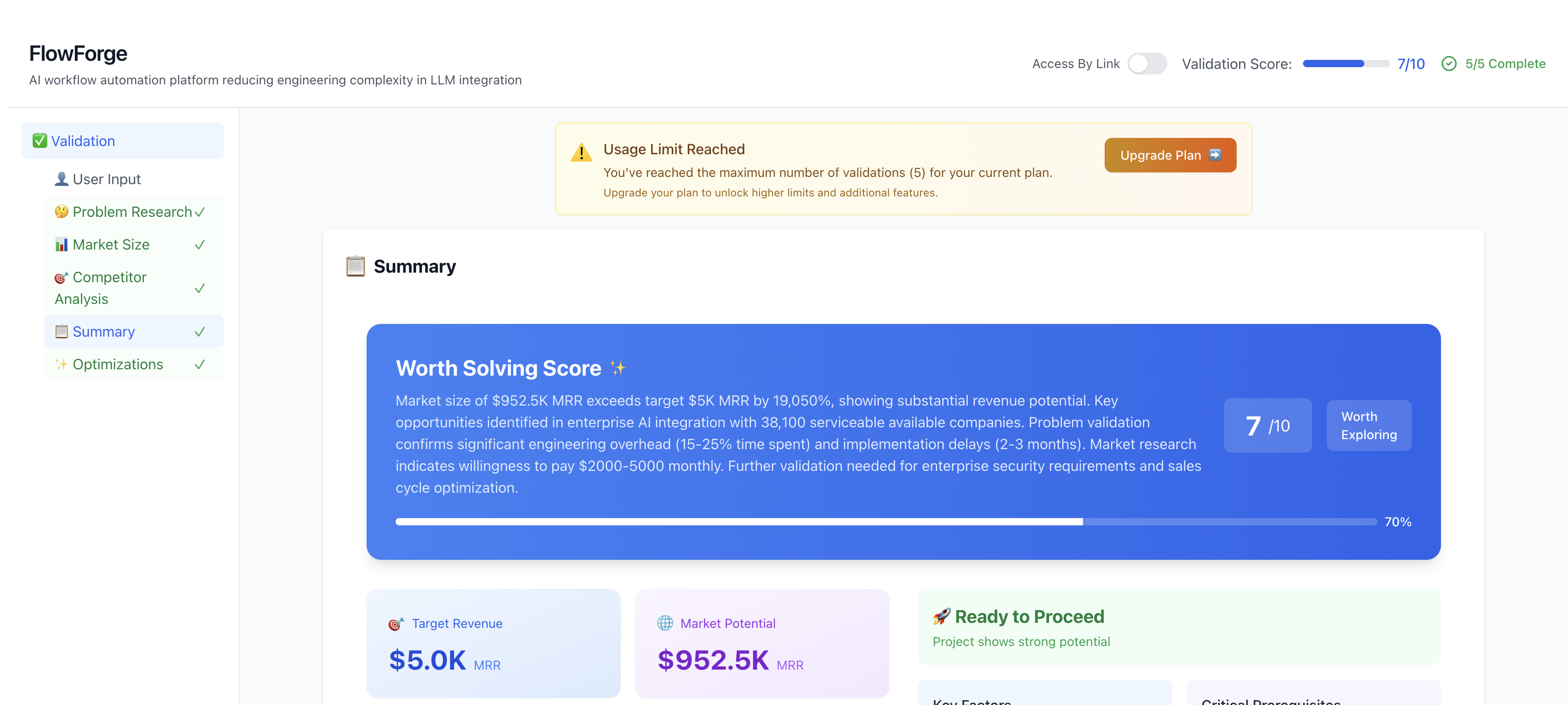

Differentiation Points

Even though I use multistep analysis when working with AI (LLM), this application is still very easy to copy, because my main differentiation points right now are:

- Prompts

- Multistep analysis graph

To make the application more unique, I decided to add a good user interface as a third differentiation point.

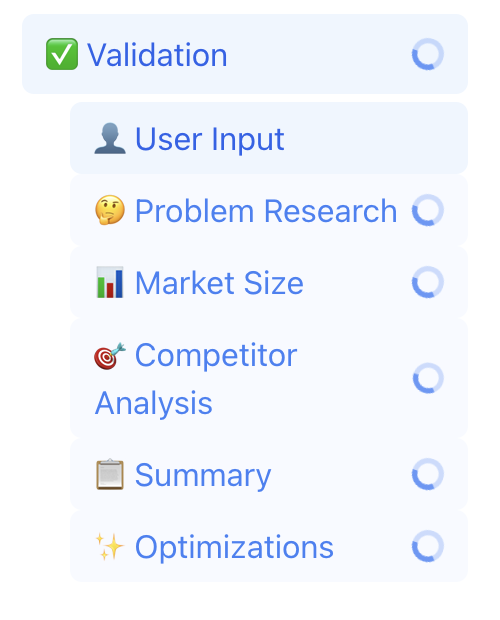


So the final list of differentiation points is:

- Prompts

- Multistep analysis graph

- User Interface

The most critical thing, which I didn't solve and which eventually became a problem for the application, is that the LLM was the golden source of truth for AI Founder: all data used in the analysis came from the LLM itself.

I will explain the solution for it later in the article.

So the general idea of the analysis and the differentiation points are clear; let's jump to the implementation part.

Technology Decisions

I decided to use:

- Next.js - to build a good UI while leveraging the fact that LLMs were trained on a huge amount of React code. As a result, LLMs can generate pretty good UI code.

- JavaScript - Next.js is a JavaScript framework, so the choice was obvious. At that time I also decided not to use TypeScript to speed up development, which was a mistake because the project became complex very quickly. Next time I will simply use TypeScript.

- Supabase - managed Postgres with additional features like user registration and authorization.

- Claude 3.5 Sonnet - at that time it was one of the best LLMs. I wanted good reasoning during idea analysis without too high an API price.

- DigitalOcean - since LLM analysis is a long-running process, I couldn't use Vercel without paying $20 per month. DigitalOcean was a significantly cheaper option, especially because I know how to build infrastructure.

- Cursor - to speed up development I used Cursor Agent, which boosted my work a lot. Time to market was reduced by 2-3x.

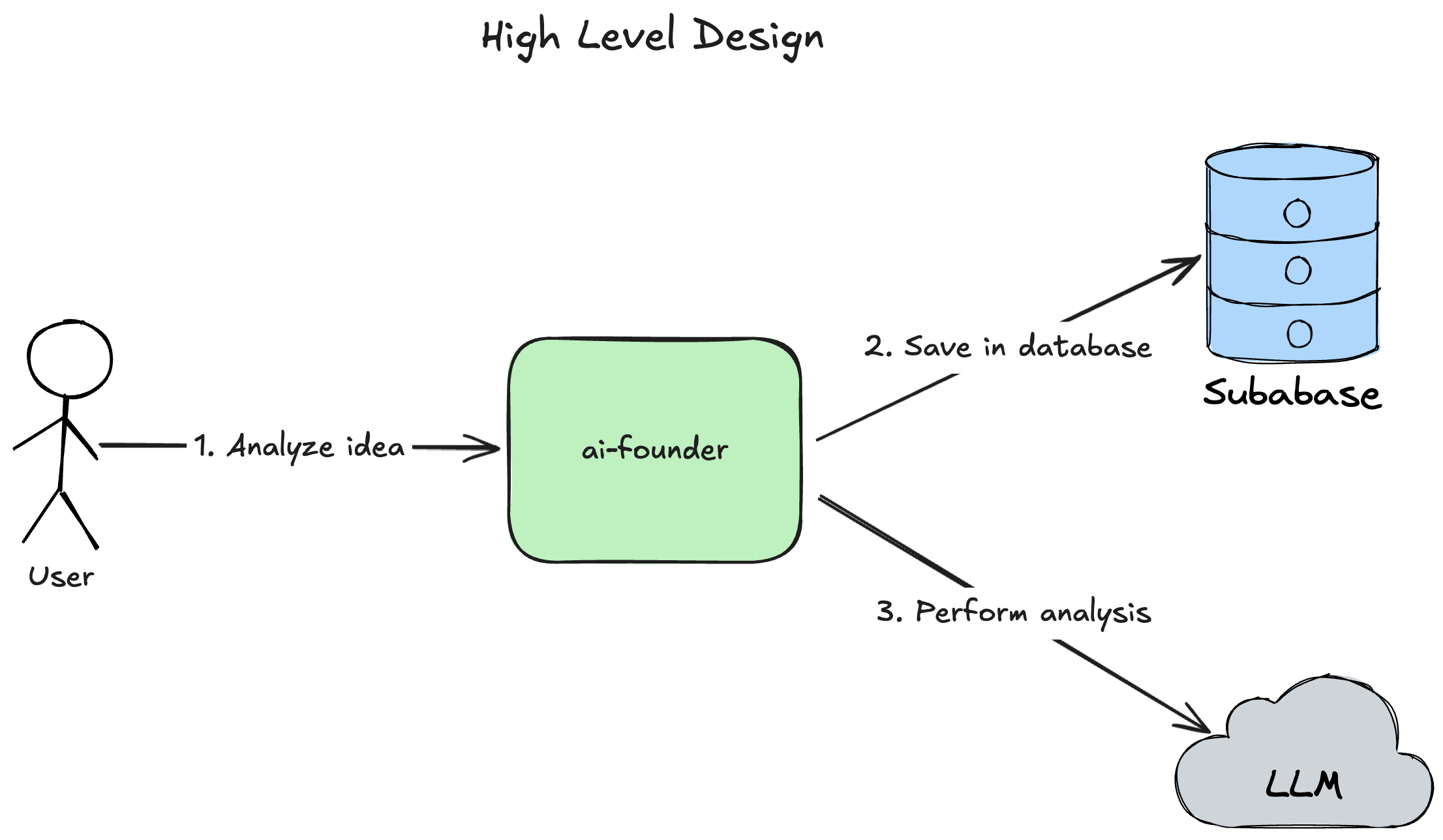

High Level Design


- User sends an idea to analyze to ai-founder

- ai-founder saves user idea to the database

- ai-founder performs idea analysis via LLM

Long analysis processing design decision

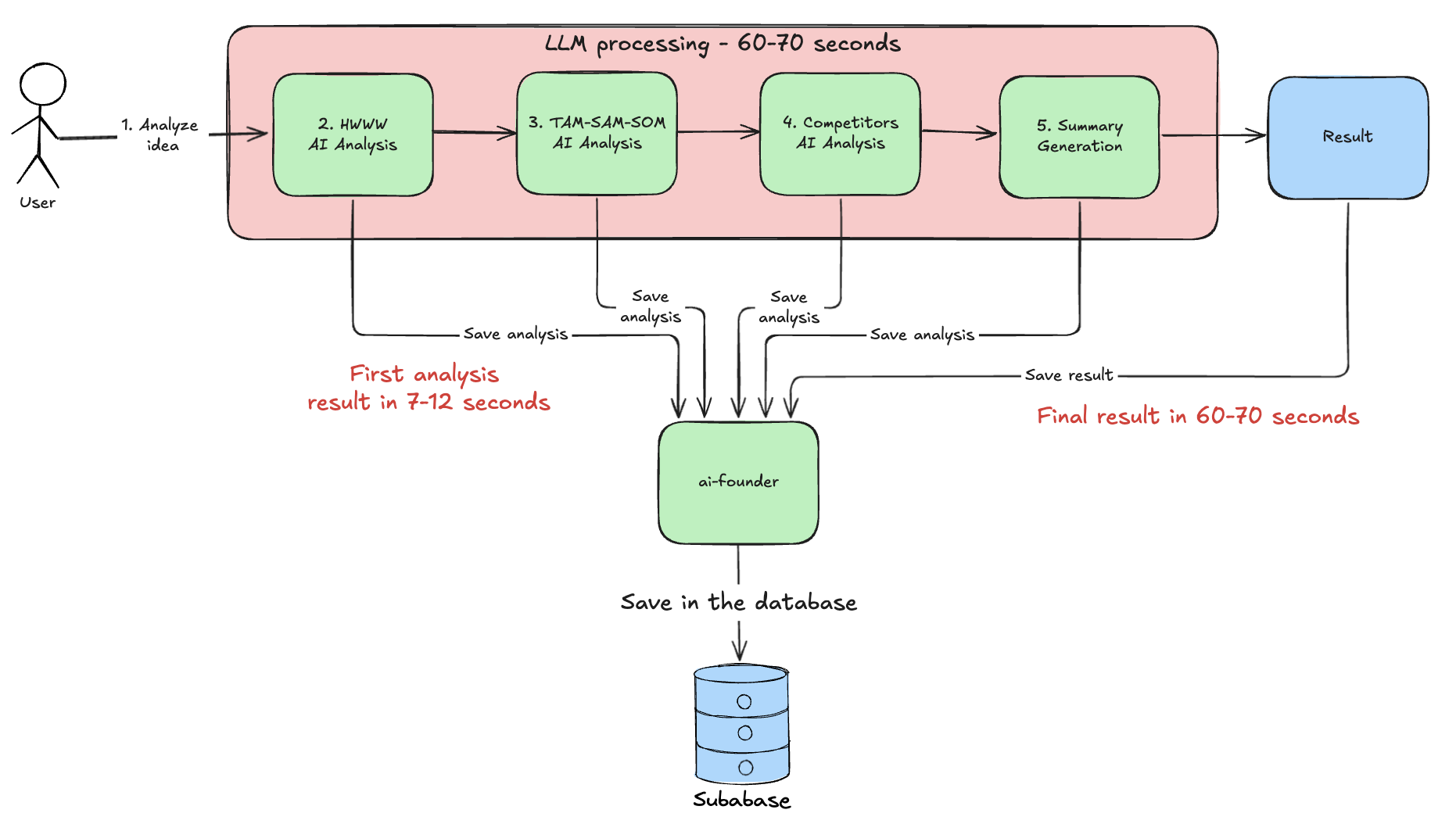

Since LLM analysis takes time, ai-founder needed 60-70 seconds to perform the whole analysis of an idea.


Users will not wait while a page shows loaders for 60-70 seconds. They will just close my application and never open it again.


That's why I decided to solve this issue with this approach:


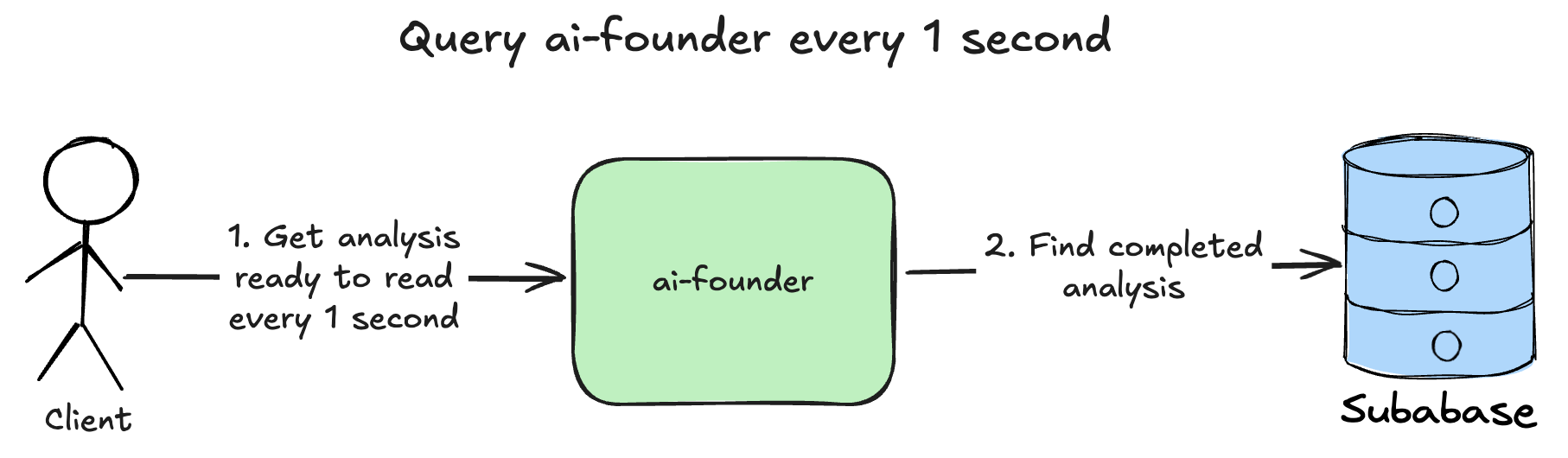

Each analysis is saved in the database immediately after it is received from the LLM. Since this analysis is a directed graph where each node is computed from the previous ones, it's possible to save intermediate results in the database and let the client query ai-founder every second. The user can then read the first results in 7-12 seconds instead of 60-70.


The client in this design is the JS client in the web browser, which queries ai-founder automatically; it's not a manual query from the user 😊.

I didn't use websockets here because I was building an MVP and just didn't need real-time updates.
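
The idea of saving each step as soon as it finishes and letting the client poll for partial results can be sketched with a small in-memory simulation. This is only an illustration under assumptions: `db`, `stubLlm`, the step names, and the `pendingTasks` field are hypothetical stand-ins, not the actual ai-founder code.

```javascript
// In-memory stand-ins: `db`, `stubLlm` and the step names are hypothetical.
const db = new Map();
const STEPS = ['hww', 'tamSamSom', 'competitors', 'summary'];
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Pretends to be a slow LLM call.
const stubLlm = async (step) => {
  await sleep(20);
  return `${step} result`;
};

// Server side: run the steps one by one and save each result as soon as it arrives.
async function runAnalysis(projectId) {
  db.set(projectId, { analysis: {}, pendingTasks: [...STEPS] });
  for (const step of STEPS) {
    const result = await stubLlm(step);
    const project = db.get(projectId);
    project.analysis[step] = result;
    project.pendingTasks = project.pendingTasks.filter((task) => task !== step);
  }
}

// Server side: the polling endpoint returns a copy of whatever has been saved so far.
const getProject = (projectId) => JSON.parse(JSON.stringify(db.get(projectId)));

// Client side: poll until no tasks are pending, reporting every snapshot to onUpdate.
async function pollUntilDone(projectId, intervalMs, onUpdate) {
  for (;;) {
    const project = getProject(projectId);
    onUpdate(project);
    if (project.pendingTasks.length === 0) return project;
    await sleep(intervalMs);
  }
}

// Starts the analysis in the background and polls it, like the browser would.
async function runDemo(projectId) {
  const analysis = runAnalysis(projectId); // not awaited: keeps running while we poll
  const readySteps = [];
  const finalProject = await pollUntilDone(projectId, 10, (project) =>
    readySteps.push(Object.keys(project.analysis).length));
  await analysis;
  return { readySteps, finalProject };
}

runDemo('demo-project').then(({ readySteps }) => console.log(readySteps));
```

The logged array shows how many steps were readable at each poll: it starts at 0 and grows to 4, so the first results can be shown long before the last step finishes.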

Those were the main design decisions; let's jump to the implementation.

Implementation

Source code of AI Founder is available on GitHub

Validation Service

Validation Service - the component responsible for executing the idea analysis and validating how good the idea is.

```javascript
const createValidationService = () => {

  // Stores the raw user request and resets the validation analysis and task list.
  const saveValidationInput = async (projectId, request) =>
    projectUpdateService.updateProject(projectId, async (project) => {
      project.data = project.data || defaultData;
      project.data.input.validation = request;
      project.data.tasks = project.data.tasks || {};
      project.data.analysis.validation = {};
      project.data.tasks.validation = Object.values(validationTasks);
    });

  // Runs the analysis steps one by one; each step receives the results of the previous ones.
  const processAsyncValidation = async (log, projectId, userInput) => {
    const data = { userInput };
    // 4. analyze hwww
    const hww = await analyzeAndSaveHww(log, projectId, data);
    data.hww = hww;

    // 5. analyze tam sam som
    const tamSamSom = await analyzeAndSaveTamSamSom(log, projectId, data);
    data.tamSamSom = tamSamSom;

    const competitorAnalysis = await generateAnalyzeCompetitors(log, projectId, data);
    data.competitorAnalysis = competitorAnalysis;

    const summary = await generateSummary(log, projectId, data);
    data.summary = summary;

    await generateOptimizations(log, projectId, data);
  };

  const generateValidation = async (userId, projectId, request) => {
    const log = loggerWithProjectId(userId, projectId);
    const startTime = Date.now();

    if (!request || typeof request !== 'object') {
      throw new Error('Invalid validation request format');
    }

    try {
      // 1. save user input
      await saveValidationInput(projectId, request);

      withDuration(log, startTime).info('Starting parallel analysis execution');
      // 2. generate name for the idea (not awaited, runs in the background)
      generateAndSaveName(log, projectId, request);
      // 3. execute LLM analysis logic (not awaited, runs in the background)
      processAsyncValidation(log, projectId, request)
        .finally(() => withDuration(log, startTime).info('Validation analysis completed'))
        .catch(error =>
          withDuration(log, startTime).error({ error }, 'Initial analysis failed in async context'));
      // Return the project right away; the client polls for the analysis results.
      return await projectRepo.get(projectId);
    } catch (error) {
      log.error({ error: error.message }, 'Validation generation failed');
      throw error;
    }
  };
  return { generateValidation };
};

export default createValidationService();
```

I omitted parts of the validation_service.js file here to simplify understanding and focus on the most important parts. The whole code of this Validation Service is available on GitHub.

The most important steps here are:

- Save the initial user input in the database so the user can retrieve the analysis whenever they need it again.

- Generate a name for the idea.

- Execute the LLM analysis logic, where each analysis step is stored in the database.

- Analyze HWW

- Analyze TAM-SAM-SOM

As you can see in the processAsyncValidation function, I use plain old one-by-one execution instead of a graph implementation. This was a strategic decision to deliver AI Founder faster instead of implementing graph execution for the AI flow. But I still think that representing AI flows as a graph is a good idea, so I evolved it into the separate service ai-svc, described in Building ai-svc: A Reliable Foundation for AI Founder. This service will be Open Source as well, and I will publish an article about it soon.
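
For comparison, a graph-based runner could look roughly like the sketch below. It illustrates the general technique only and is not the ai-svc implementation: `analysisGraph`, `runGraph`, and `stubLlm` are hypothetical names, and the dependencies between steps are my assumption based on the sequential flow above.

```javascript
// Hypothetical stand-in for an LLM call: echoes the step name and the inputs it received.
const stubLlm = async (step, inputs) => `${step}(${Object.keys(inputs).sort().join(',')})`;

// Each node names the nodes it depends on; their results become its inputs.
const analysisGraph = {
  hww: { dependsOn: [], run: (inputs) => stubLlm('hww', inputs) },
  tamSamSom: { dependsOn: ['hww'], run: (inputs) => stubLlm('tamSamSom', inputs) },
  competitors: { dependsOn: ['hww', 'tamSamSom'], run: (inputs) => stubLlm('competitors', inputs) },
  summary: {
    dependsOn: ['hww', 'tamSamSom', 'competitors'],
    run: (inputs) => stubLlm('summary', inputs),
  },
};

// Runs every node once all of its dependencies have finished.
// Nodes that become ready in the same round run in parallel.
async function runGraph(graph, onNodeDone = () => {}) {
  const results = new Map();
  const pending = new Set(Object.keys(graph));

  while (pending.size > 0) {
    const ready = [...pending].filter((name) =>
      graph[name].dependsOn.every((dep) => results.has(dep)));
    if (ready.length === 0) {
      throw new Error(`Cycle or missing dependency among: ${[...pending].join(', ')}`);
    }

    await Promise.all(ready.map(async (name) => {
      const inputs = Object.fromEntries(
        graph[name].dependsOn.map((dep) => [dep, results.get(dep)]));
      const result = await graph[name].run(inputs);
      results.set(name, result);
      pending.delete(name);
      await onNodeDone(name, result); // e.g. save the intermediate result to the database
    }));
  }
  return Object.fromEntries(results);
}

const completionOrder = [];
const graphRun = runGraph(analysisGraph, (name) => completionOrder.push(name));
graphRun.then((results) => console.log(completionOrder, results.summary));
```

With this chain-shaped graph the nodes still finish in the same order as the sequential version. The benefit shows up once independent branches exist, because they run in the same round without changes to the runner.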

Prompts

Prompts are special instructions that tell the LLM to perform some action, for example an analysis. They are like code in JavaScript, but in English. It's very important to keep prompts clean and explicit, otherwise the LLM will not produce the expected output.

Here is an example of the prompt I used to generate the HWW analysis:

```javascript
import { validationPrompts } from '@/lib/ai/prompt/context';

// 1. Prompt itself
const instructions = `
- Perform comprehensive HWW analysis with growth-oriented criteria:
  - Market Opportunity Assessment:
    - Validate breakthrough potential
    - Classify as "Market disruption" vs "Growth accelerator"
    - Identify innovation opportunities
    - Evaluate first-mover advantages
    - Analyze success accelerators
    - Study scaling opportunities

  - Market Leadership Validation:
    - Assess premium value potential
    - Analyze pricing optimization opportunities
    - Identify rapid adoption catalysts
    - Map market domination paths
    - Optimize customer acquisition channels
    - Document value multipliers

  - Solution Leadership Check:
    - Validate competitive advantages
    - Identify scaling opportunities
    - Map compliance innovations
    - Optimize launch timeline
    - Analyze efficiency multipliers
    - Evaluate market leadership potential

  - Target Demographics Analysis:
    - Map market domination potential
    - Document behavioral opportunities
    - Track engagement accelerators
    - Identify loyalty builders
    - Map growth multipliers
    - Validate premium positioning
    - Assess revenue optimization
    - Map decision catalysts
    - List adoption accelerators

- Use evidence-based validation with breakthrough focus
- Focus on market leadership metrics
- Document clear domination paths
- Maintain balanced yet ambitious assessment
- Provide readiness to pay and a number for money which users are willing to pay for the solution.
`.trim();

// 2. Output schema
const schema = {
  "how_big_a_problem_is": {
    "overview": {
      "description": "",
      "size": 0,
      "dimension": ""
    },
    "frequency": [
      {
        "name": "",
        "explanation": ""
      }
    ],
    "readiness_to_pay": {
      "summary": "",
      "pricing": 0,
      "researches": [
        {
          "research": "",
          "explanation": ""
        }
      ]
    },
    "persistence": {
      "duration": "",
      "trend": "",
      "explanation": ""
    },
    "urgency": {
      "level": "",
      "explanation": ""
    },
    "historical_attempts": [
      {
        "name": "",
        "result": ""
      }
    ]
  },
  "why_does_this_problem_exist": {
    "summary": "",
    "reasons": [
      {
        "name": "",
        "explanation": ""
      }
    ]
  },
  "why_nobody_solving_it": {
    "summary": "",
    "reasons": [
      {
        "name": "",
        "explanation": ""
      }
    ]
  },
  "who_faces_this_problem": {
    "summary": "",
    "metrics": {
      "characteristics": [
        {
          "name": "",
          "value": ""
        }
      ],
      "geography": [],
      "psychology_patterns": [
        {
          "name": "",
          "value": ""
        }
      ],
      "specific_interests": [],
      "habitual_behaviour": [
        {
          "name": "",
          "value": ""
        }
      ],
      "trust_issues": [
        {
          "name": "",
          "value": ""
        }
      ],
      "where_to_find_them": [
        {
          "name": "",
          "value": ""
        }
      ]
    }
  }
};

export default validationPrompts(instructions, schema);
```

So as you can see, the prompt has two parts:

- The prompt itself, which contains instructions in English.

- The output schema which the LLM should produce. It contains field names with empty values of the expected type, to give the LLM a hint about which type to use for each field.
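
One way such a schema could be used is sketched below: the empty values are embedded in the prompt and then reused to check the types in the LLM's JSON reply. `buildPrompt` and `findShapeErrors` are hypothetical helpers written for this illustration; the article does not show how `validationPrompts` works internally.

```javascript
// Hypothetical helper: combines the instructions with the schema as an output template.
function buildPrompt(instructions, schema) {
  return [
    instructions,
    'Respond with JSON only, matching this structure exactly:',
    JSON.stringify(schema, null, 2),
  ].join('\n\n');
}

// Returns the paths where `value` does not match the type hinted by `template`.
// "" hints a string, 0 a number, [] an array (its first element is the item template).
function findShapeErrors(template, value, path = '$') {
  if (Array.isArray(template)) {
    if (!Array.isArray(value)) return [`${path}: expected array`];
    if (template.length === 0) return []; // no item template, accept any items
    return value.flatMap((item, i) => findShapeErrors(template[0], item, `${path}[${i}]`));
  }
  if (template !== null && typeof template === 'object') {
    if (value === null || typeof value !== 'object' || Array.isArray(value)) {
      return [`${path}: expected object`];
    }
    return Object.keys(template).flatMap((key) =>
      (key in value
        ? findShapeErrors(template[key], value[key], `${path}.${key}`)
        : [`${path}.${key}: missing`]));
  }
  return typeof value === typeof template ? [] : [`${path}: expected ${typeof template}`];
}

// A tiny schema in the same style as the one above, and a reply with two mistakes.
const exampleSchema = { summary: '', size: 0, reasons: [{ name: '', explanation: '' }] };
const llmOutput = JSON.parse('{"summary":"Big","size":"large","reasons":[{"name":"Cost"}]}');

console.log(findShapeErrors(exampleSchema, llmOutput));
// reports two errors: '$.size: expected number' and '$.reasons[0].explanation: missing'
```

A check like this only validates the shape, not the content, but it catches the common case where the LLM returns a string where a number was expected or drops a field.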

Other prompts used in AI Founder are available on GitHub.

UI Development

AI Founder is available here.

As I mentioned above, the UI became a differentiation point in my application, so I had to build a good UI. But I'm a back-end engineer, and I can build UIs which are good for me but not very good for an end user. So to build a good UI I decided to use Cursor in agent mode with prompts like:

Use TailwindCSS and Next.js to build idea analyser page which will contain:

- HWW

- TAM-SAM-SOM

- ...

This page should have a beautiful UX and be mobile friendly

After a number of iterations, Cursor built a beautiful UI for me.

I also added dynamic behaviour, such as polling for analysis results on a timer, to improve UX:

```javascript
import { useState, useEffect } from 'react';
import projecApi from '@/lib/client/api/project_api';

const POLLING_INTERVAL = 3000;

// True while the project still has unfinished analysis tasks.
const hasAnyTasks = (tasks) => {
  if (!tasks) return false;
  return Object.values(tasks).some(taskList => Array.isArray(taskList) && taskList.length > 0);
};

export default function useProjectPolling(initialProject) {
  const [project, setProject] = useState(initialProject);
  const [isPolling, setIsPolling] = useState(hasAnyTasks(initialProject?.data?.tasks));

  const startPolling = (project) => {
    setProject(project);
    setIsPolling(true);
  };

  useEffect(() => {
    let timeoutId;

    const pollProject = async () => {
      if (!isPolling) return;

      try {
        console.log('Polling project:', project.id);
        const updatedProject = await projecApi.getProject(project.id);
        setProject(updatedProject);

        // Keep polling while tasks remain; stop once the analysis is complete.
        if (hasAnyTasks(updatedProject?.data?.tasks)) {
          timeoutId = setTimeout(pollProject, POLLING_INTERVAL);
        } else {
          setIsPolling(false);
        }
      } catch (error) {
        // On a failed request, retry after the same interval.
        console.error('Polling error:', error);
        if (isPolling) {
          timeoutId = setTimeout(pollProject, POLLING_INTERVAL);
        }
      }
    };

    if (isPolling) {
      timeoutId = setTimeout(pollProject, POLLING_INTERVAL);
    }

    // Cancel the scheduled poll on unmount or when the dependencies change.
    return () => {
      if (timeoutId) {
        clearTimeout(timeoutId);
      }
    };
  }, [project?.id, isPolling]);

  return { project, startPolling, setProject };
}
```

The code above uses React hooks to query the analysis for a project every 3 seconds, which makes the page feel dynamic for my end users.

There are a lot of other interesting code solutions in this project. I can't cover them all, but the code is on GitHub, so feel free to check it.

Deployment

I deployed the AI Founder project on DigitalOcean simply by using docker-compose with this configuration:

```yaml
services:
  swag:
    image: linuxserver/swag
    container_name: swag
    cap_add:
      - NET_ADMIN
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=Etc/UTC
      - URL=<url>
      - EXTRA_DOMAINS=<extra_url>
      - VALIDATION=http
      - EMAIL=<email>
    volumes:
      - ./swag/config:/config
    ports:
      - 443:443
      - 80:80
    restart: unless-stopped
    networks:
      - web
  aifounder:
    image: weaxme/pet-project:ai-business-founder-latest
    container_name: aifounder
    environment:
      - NEXT_PUBLIC_SUPABASE_URL=<supabase_url>
      - NEXT_PUBLIC_SUPABASE_ANON_KEY=<supabase_key>
      - ANTHROPIC_API_KEY=<antropic_key>
      - NODE_ENV=production
      - BASE_URL=<url>
      - NEXT_PUBLIC_API_URL=<api_url>
      - STRIPE_SECRET_KEY=<stripe_secret_key>
      - STRIPE_HOBBY_PRICE_ID=<stripe_price>
      - STRIPE_PRO_PRICE_ID=<stripe_price>
    restart: unless-stopped
    networks:
      - web
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:3000/api/health"]
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
networks:
  web:
    external: false
```

- linuxserver/swag - for proxy management

- weaxme/pet-project:ai-business-founder-latest - AI Founder docker image

Important tips for deploying an indie project with docker-compose:

- Always define a healthcheck endpoint so Docker can detect when a container becomes unhealthy, for example after an OOM or another problem with the service, and the container can be restarted. This endpoint also lets you quickly see the service status in the docker ps output.

- Docker Hub allows hosting only one private repository for docker images for free, which means that with multiple projects I would need to buy a premium plan. But if the docker image tag holds the service name instead of just the version, like I did with weaxme/pet-project:ai-business-founder-latest, Docker Hub allows hosting any number of pet projects on the free plan. This works because the image tag carries both the service name and the version, instead of following the usual registry convention of keeping the service name before the image tag.
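
As an illustration of the first tip, the logic behind a health endpoint can aggregate a few dependency checks and report a failing status when any of them breaks. This is a minimal sketch under assumptions: `buildHealthReport` and the check names are hypothetical, and the real /api/health handler of AI Founder is not shown in this article.

```javascript
// Hypothetical sketch of the logic behind a health endpoint.
// Each check is an async function that throws when its dependency is unhealthy.
async function buildHealthReport(checks) {
  const entries = await Promise.all(
    Object.entries(checks).map(async ([name, check]) => {
      try {
        await check();
        return [name, 'ok'];
      } catch (error) {
        return [name, `failed: ${error.message}`];
      }
    }));
  const results = Object.fromEntries(entries);
  const healthy = Object.values(results).every((status) => status === 'ok');
  // `curl -f` exits with an error for HTTP status codes >= 400,
  // so returning 503 makes the docker healthcheck fail.
  return {
    httpStatus: healthy ? 200 : 503,
    body: { status: healthy ? 'ok' : 'unhealthy', checks: results },
  };
}

buildHealthReport({
  database: async () => {}, // pretend the database responded
  llm: async () => { throw new Error('timeout'); }, // pretend the LLM API timed out
}).then((report) => console.log(report.httpStatus, report.body.checks));
// 503 { database: 'ok', llm: 'failed: timeout' }
```

A route handler would then only need to call this function and send `body` with `httpStatus`. Keeping the checks cheap matters, because with the configuration above Docker calls the endpoint every 30 seconds.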

Key Learnings

AI Founder wasn't a product that earned money, but it gave me valuable learnings which I will use in my next projects.

Technology Decisions

After developing the project, these are the things I would change next time:

- JavaScript -> TypeScript - I decided to use JS instead of TS at the start of the project to speed up development, but it was a huge mistake because the project became complex very quickly and types would have simplified development a lot. So next time I will simply use TS from the start.

- Supabase -> Postgres - I decided to use Supabase to minimize the infrastructure I had to configure myself, but it was a huge mistake. I got vendor lock-in with very strict limits imposed by Supabase, and its user registration flow simply didn't work as simply and as well as I expected. Next time I will just use Postgres and implement the user registration flow myself. What's more, I have already set up my own infrastructure on a VPS, which is cheap and reusable without any strict vendor limits.

- DigitalOcean -> Hetzner - while DigitalOcean is pretty good and significantly cheaper than Vercel or AWS, I found that Hetzner is cheaper still. And I have already built infrastructure for myself on Hetzner for my next projects.

Product Idea Validation

While I was working on a product to validate business ideas, I validated the idea of this product itself with Custom GPT - Product Idea Analyzer, which I developed in June 2024. It showed promising results and I didn't check anything else. This was a huge mistake, because users just didn't see any value in my product that they were ready to pay for.

I learned that statistical data is not enough to validate a product idea and that I should speak to clients first. By March 2025 I had already been trying to sell AI Founder for a month, and I realized that I was doing something wrong, so I started learning at the ID Accelerator program. It gave me some of the most important product knowledge I have acquired recently.

I shouldn't build anything first. I should speak with real clients, and only after that start prototyping a solution.

This approach to building products is completely different from my own:

- Build product

- Try to sell

- Fail

- Repeat

In the new approach everything is different:

- Find customers for interviews

- Speak with customers to identify their problems

- Prototype a solution for customers

- Validate prototype with customers

- Build a product

That's it. Building a new product is not about programming. It's about:

- Understanding customers and their problems

- Marketing

- 20% programming

Product Decisions

The most important learnings:

- Do not build login via email / password; use OAuth2 with a provider like Google or Facebook instead. Too many users abandoned my app after not receiving the confirmation email within the first couple of seconds, which was a common issue at the start. I just disabled the requirement to confirm an email. In the next product I will use something like Auth0 from the beginning.

- Product features should work well and be stable. That's how the new project ai-svc was born.

Differentiation points in AI product

I think that in an AI product it's not the prompts that should bring value, but these:

- Unique data.

- Unique process automation (not related to AI, but still valid here).

Conclusions

AI Founder became a project where I learned a lot about AI Engineering and product building. While working on it I built another product, which is supposed to be an AI infrastructure project. I also learned that I had been validating product ideas the wrong way.

I should act as an entrepreneur, not as an engineer, if I want to build a successful product.

It's hard for me to accept; I still love writing code and configuring infrastructure too much. One result of AI Founder is this technical blog, where I will publish my new learnings and journey.


References

- You can access AI Founder on GitHub

- Try AI Founder here

- Demo is here

- ID Accelerator - highly recommend if you want to get product building skills.
